The Linear Algebra Notes

Contents

- 0. Introduction

- 1. Vectors and Linear Combination

- 2. Matrices and Linear Transformation

- 3. Determinant

- 4. Equations, Inverse Matrices and Rank

- 5. Elimination with Matrices

- 6. Nonsquare Matrices, Dot Products, Cross Products and Solving System of Linear Equations

- 7. Cramer’s Rule

- 8. Change of Basis

- 9. Eigenvectors, Eigenvalues and Diagonalization

- 10. Abstract Vector Spaces

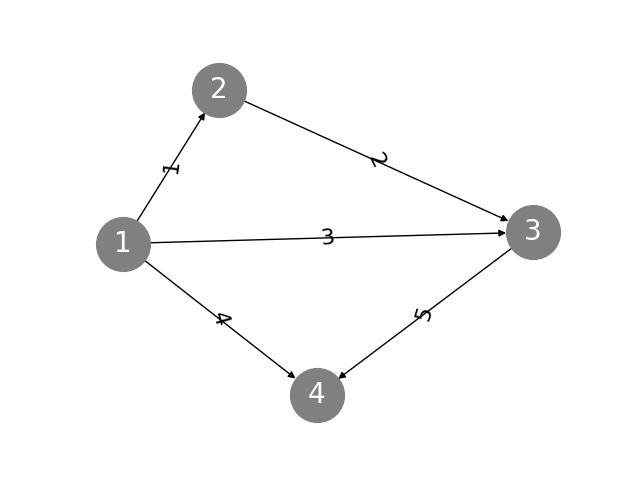

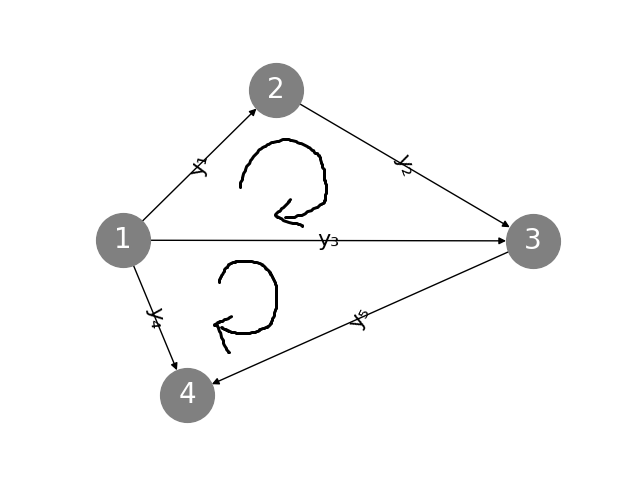

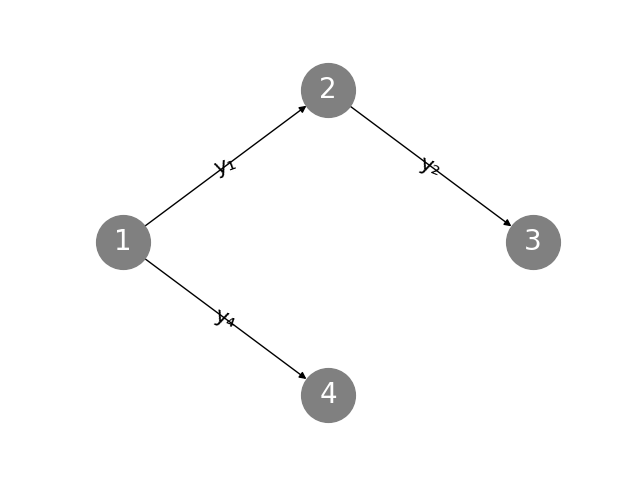

- 11. Graphs and Networks

- 12. Orthogonal Vectors and Matrices

- 13. Properties of Determinants

- 14. Difference Equations, Differential Equations (ODE) and exp(At)

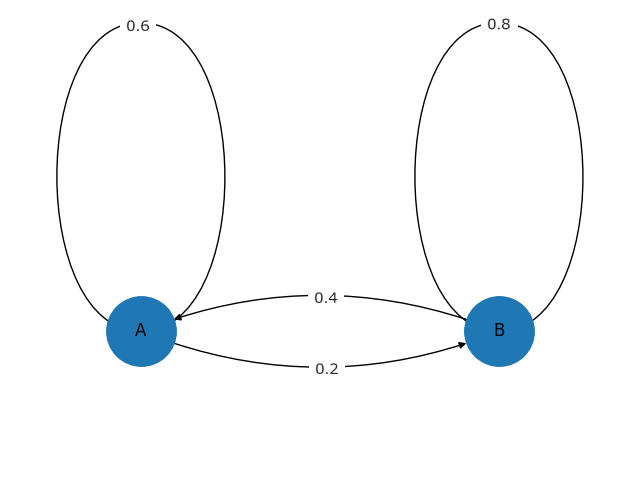

- 15. Markov Matrices and Fourier Series

- 16. Complex Vectors, Complex Matrices and Fast Fourier Transform (FFT)

- 17. Symmetric Matrices and Positive Definiteness

- 18. Similar Matrices and Jordan Form

- 19. Singular Value Decomposition (SVD)

- 20. Left and Right Inverses; Pseudoinverse

- 98. Proofs of Some Properties

- 99. Terminologies in Different Languages

0. Introduction

This page is about the note of linear algebra. Myriads of students focus too much on the numeric concept of linear algebra, but the geometrical understanding is also worth considering. So here is a linear combination note combining the lecture by MIT-1806 and The essence of linear algebra by 3B1B.

If the formulae showed in this page are not displayed correctly, please try refreshing the page. The display of formulae bases on $\LaTeX$ and the render engine is MathJax.

If you want to jump to some chapter, it’s recommended to scroll the page to the end first, or the page may be scrolled back for the finishing of image loading after jumping.

To scroll the page to the end, you can click the last chapter or press the key (Ctrl+End) on the keyboard.

The contents are not arranged sequentially from the lectures mentioned above.

What should be noticed is that this is not a serious thesis or paper. In order to make the content more comprehensible, there may be not-strict-enough expressions and even mistakes.

If there is any fatal mistake exists, please leave a comment or send an e-mail to me.

Not all images in this page is original. Some of them come from the Internet.

This article is the new version, replacing the old one written in 2022.7.9.

1. Vectors and Linear Combination

1.1 What is the vector

Vector is the bedrock of linear algebra. But what the vector really is?

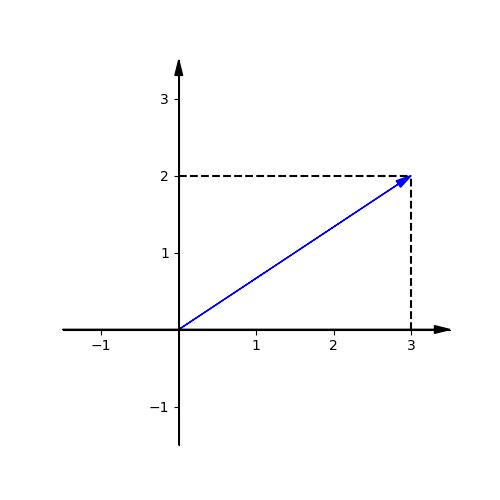

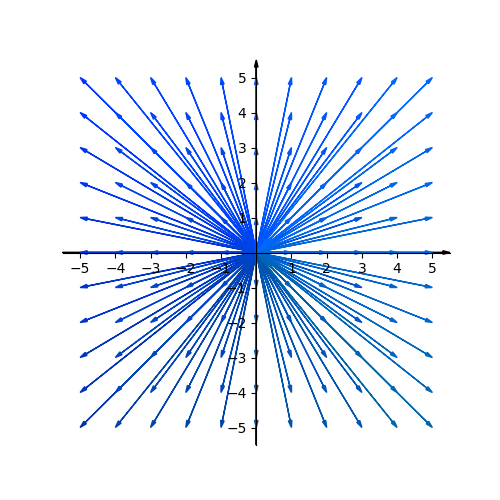

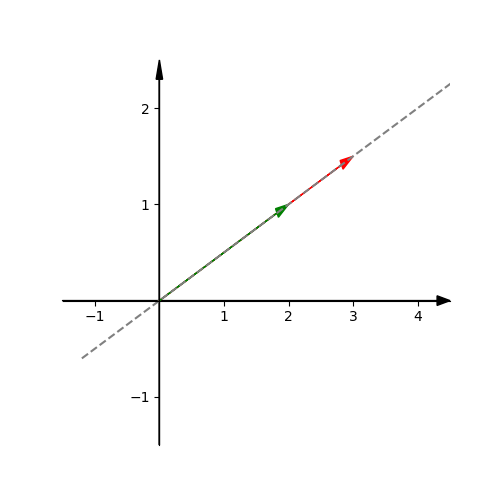

In mathematical concept, vector can be described as an arrow starts on an origin and points at one direction. The vector can be placed on anywhere, but we can fix it on the origin point of a coordinate system.

Imagine there is a 2-dimensional coordinate with two perpendicular x-axis and y-axis.

And we can describe this vector as $\mathbf{\hat v} = \begin{bmatrix} 3 \\ 2 \end{bmatrix}$. The numbers tell another point in the coordinate where the vector points at.

1.2 The addition and multiplication of vectors

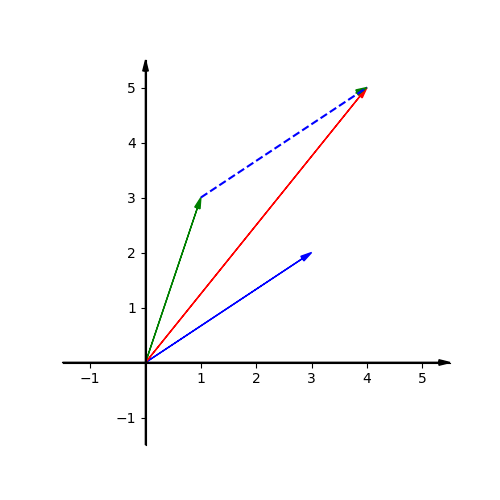

If we and two vectors together, it means move the second vector’s tail to the tip of the first one, and where the tip of the second vector sits is the sum of two vector.

If we multiply a vector by a number, it scales the vector. If the multiplier number is negative, it reverses the vector.

The numbers used to scale the vector is called scaler.

$$

\begin{equation}

a \cdot

\begin{bmatrix} x \\ y \end{bmatrix}

=

\begin{bmatrix} a \cdot x \\ a \cdot y \end{bmatrix}

\end{equation}

$$

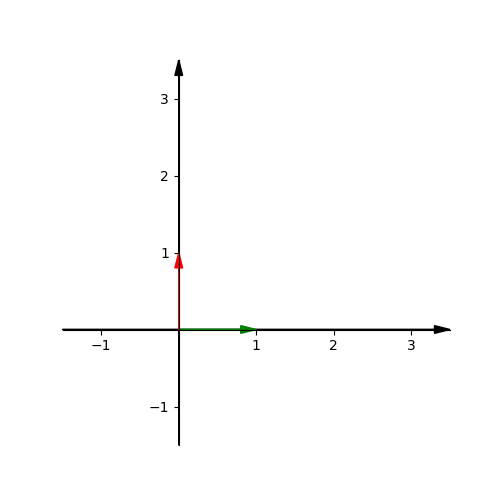

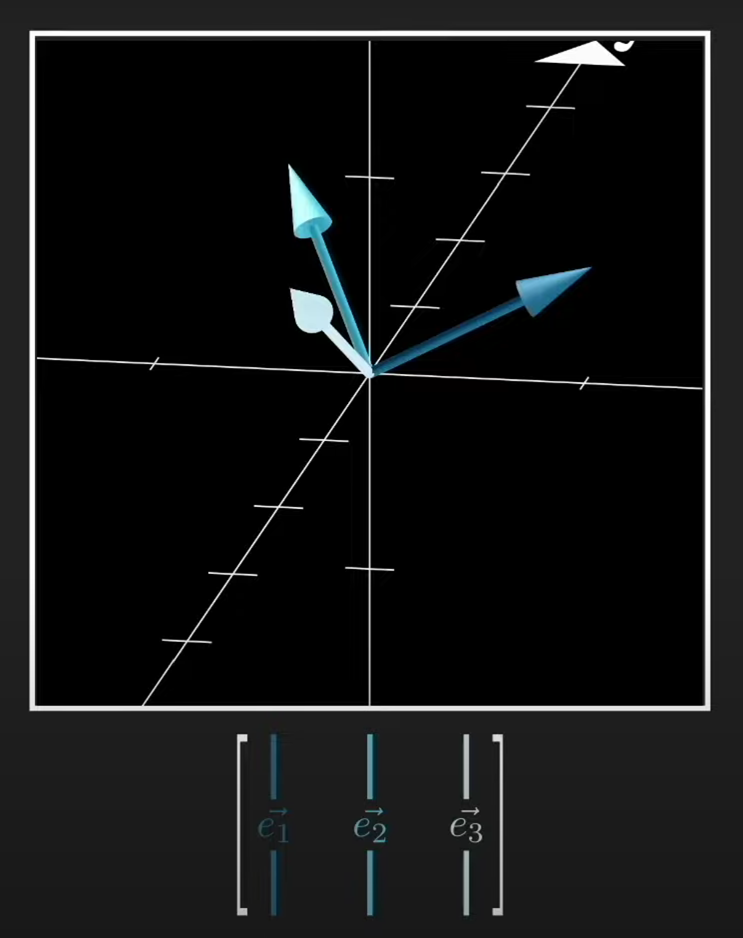

There are two special vectors $\hat i = \begin{bmatrix} 1 \\ 0 \end{bmatrix}$ and $\hat j = \begin{bmatrix} 0 \\ 1 \end{bmatrix}$, which are called unit vectors.

If we scale these two vectors and add them together, it produces a new vector. Whatever the new vector is, these must be a scaler $a$ and a scaler $b$ to produce it. That means if we draw all the vectors produced by the multiplication and addition of $\hat i$ and $\hat j$, it will fill all space in the plane. The $\hat i$ and $\hat j$ are the basis vectors of the xy coordinate.

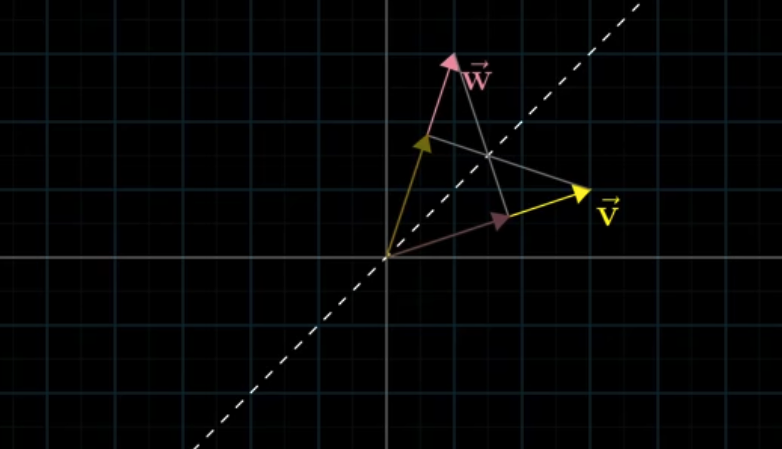

When we scale two vectors and add them together, it’s called linear combination.

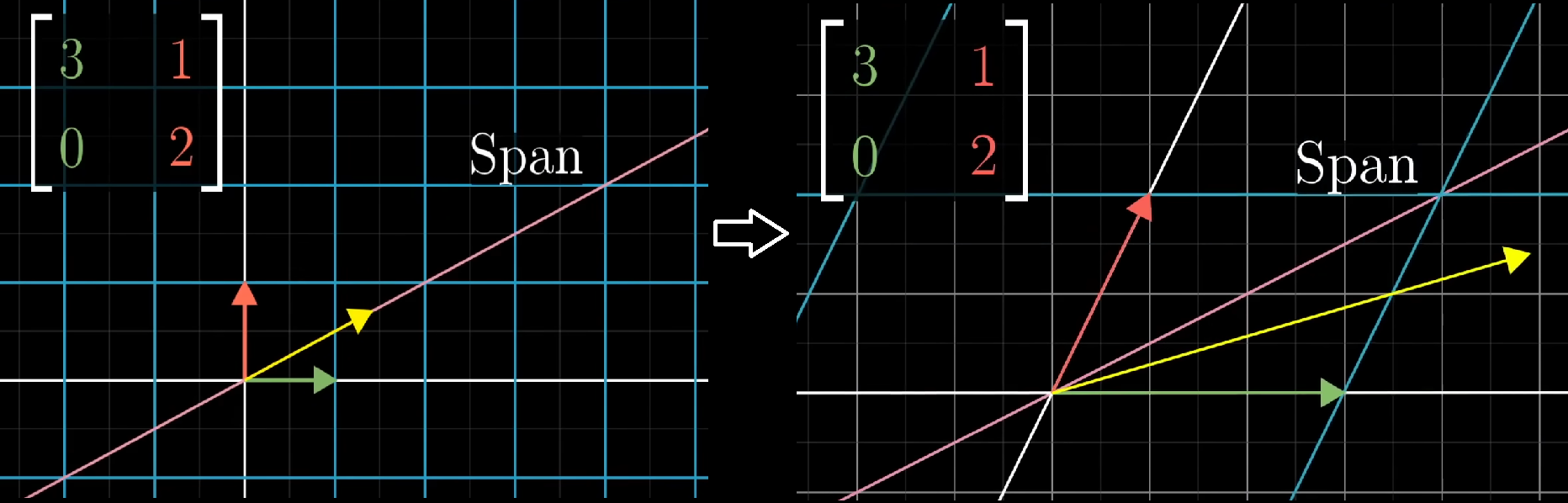

1.3 What if we can choose different basis vectors?

If we choose two random vectors and scale them and add them together, it can also produce a new vector. But if these two basis vectors are paralleled, that means two vectors are unfortunately in the same line, the new result vector will be limited in a line. If one of the original vectors is zero, the new result vector will be zero.

The set of all possible vectors that you can reach with a linear combination of a given pair of vectors is called the span of those two vectors.

So we can say, the span of most pairs of 2D vectors is all vectors of 2D space. But when they line up, the span is all vectors whose tip sit on a certain line.

If this pair of 2D vectors(not zero) $\vec u$ and $\vec v$ are line up, one of them can be scaled to produce another one. In this situation, $\vec u = a \vec v$. We say $\vec u$ and $\vec v$ are linearly dependent.

If two vectors are line up, the span has one dimension. If they are not, the span would be 2 dimensions.

In 3-dimensional space, the span of two vectors can only be a flat as possible. If we add the third vector, and when it is outside the flat of other two vectors, the span will be expanded to 3 dimensions. If each vector does add another dimension to the span, they are said to be linearly independent.

The basis of a vector space is a set of linearly independent vectors that span the full space.

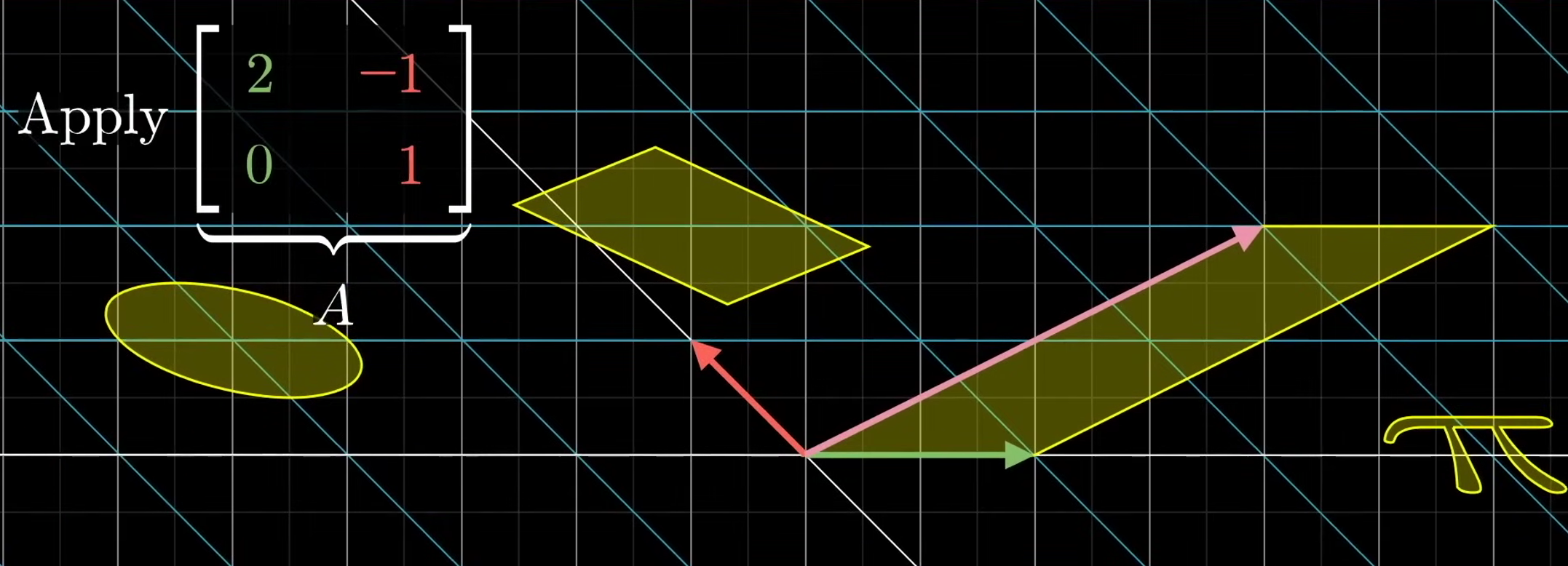

2. Matrices and Linear Transformation

2.1 What is transformation

Linear transformation is like a function. It takes in inputs and produces outputs.

$$

inputs \rightarrow f(x) \rightarrow outputs

$$

In the context of linear algebra, it takes in vector and outputs another vector.

$$

\vec u \rightarrow L(\vec v) \rightarrow \vec w

$$

Imagine there is an image consists series of points, a transformation scales, rotates, and even distorts the image.

Fortunately linear transformation won’t curve a line, that’s why it is called linear.

In a linear transformation, the line will still be a line, and the origin will not move.

If a linear transformation applied with $\hat i$, $\hat j$ and $\vec v = a\hat i + b\hat j$, there will be $\hat i_{transformed}$, $\hat j_{transformed}$ and $\vec v_{transformed} = a\hat i_{transformed} + b\hat j_{transformed}$.

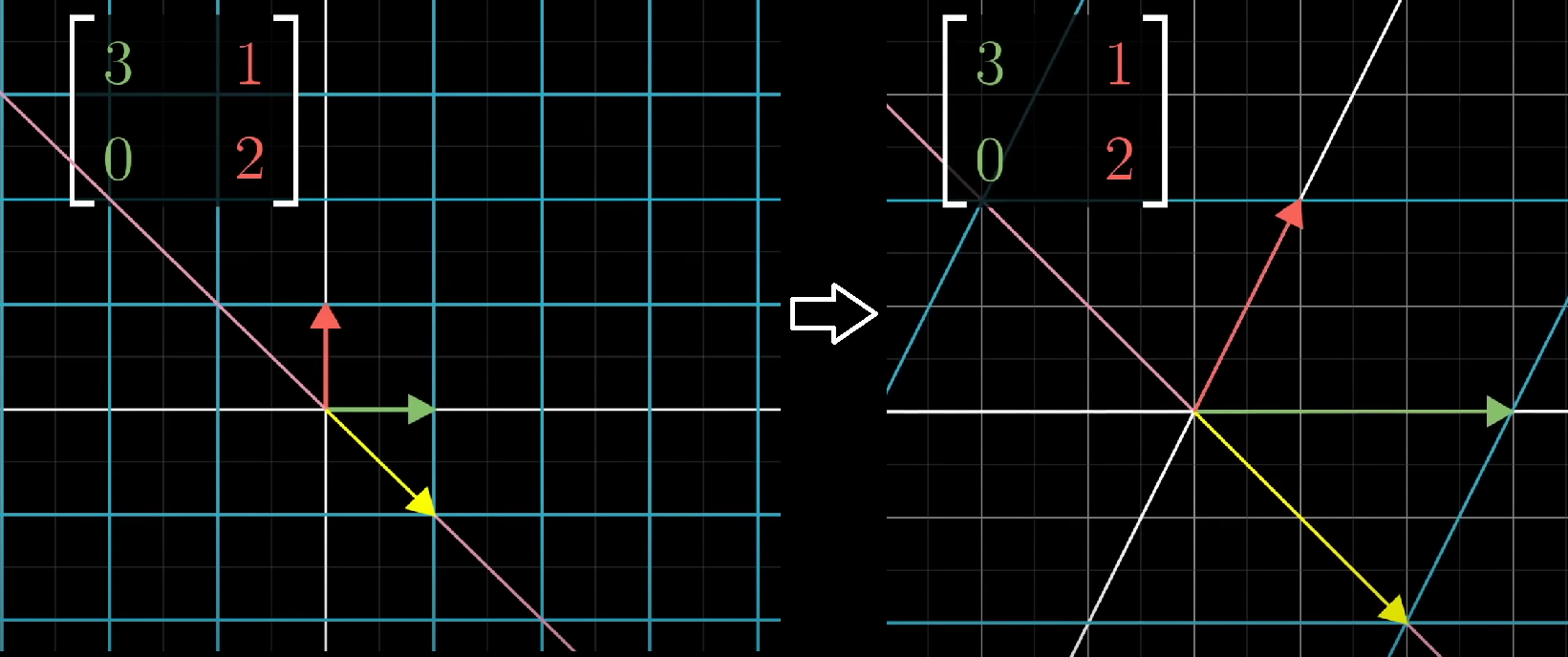

So if we transform $\hat i = \begin{bmatrix} \color{green}1 \\ \color{green}0 \end{bmatrix}$ to $\hat i_{transformed} = \begin{bmatrix} \color{green}3 \\ \color{green}-2 \end{bmatrix}$ and $\hat j = \begin{bmatrix} \color{red}0 \\ \color{red}1 \end{bmatrix}$ to $\hat j_{transformed} = \begin{bmatrix} \color{red}2 \\ \color{red}1 \end{bmatrix}$, then all the vectors produced by the two basis vectors can be easily transformed by multiplication and addition of the two basis vectors.

2.2 Matrix

The pack $\hat i_{transformed}$ and $\hat j_{transformed}$ into a 2x2 grid of numbers is called a 2x2 matrix.

$$

\begin{bmatrix}

\color{green}3 & \color{red}2 \\

\color{green}-2 & \color{red}1

\end{bmatrix}

$$

It tells where the two special basis vectors land after transformation. It also tells where the other vectors land after this transformation.

For example, $\vec v=\begin{bmatrix} \color{orange}5 \\ \color{orange}7 \end{bmatrix}$, applied with the matrix above, then

$$

\begin{equation}

\vec v_{transformed} =

\color{orange}{5} \color{black}

\begin{bmatrix}

\color{green}3 \\

\color{green}-2

\end{bmatrix} +

\color{orange}{7} \color{black}

\begin{bmatrix}

\color{red}2 \\

\color{red}1

\end{bmatrix}

\end{equation}

$$

In general cases, we put a matrix on the left of the vector to transform the vector.

$$

\begin{equation}

\begin{bmatrix}

\color{green}a & \color{red}b \\

\color{green}c & \color{red}d

\end{bmatrix}

\begin{bmatrix}

\color{orange}x \\

\color{orange}y

\end{bmatrix}

=

\color{red}{x} \color{black}

\begin{bmatrix}

\color{green}a \\

\color{green}c

\end{bmatrix} +

\color{red}{y} \color{black}

\begin{bmatrix}

\color{red}b \\

\color{red}d

\end{bmatrix} =

\begin{bmatrix}

\color{green}a\color{orange}x + \color{red}b\color{orange}y \\

\color{green}c\color{orange}x + \color{red}d\color{orange}y

\end{bmatrix}

\end{equation}

$$

For example, let’s see what happens to $

\begin{equation}

\begin{bmatrix} \color{green}3 & \color{red}1 \\ \color{green}1 & \color{red}2 \end{bmatrix}

\begin{bmatrix} \color{orange}-1 \\ \color{orange}2 \end{bmatrix} =

\begin{bmatrix} \color{orange}-1 \\ \color{orange}3 \end{bmatrix}

\end{equation}

$

See another example. If you want to rotate all of space 90 degrees counterclockwise, then $\hat i$ lands on $\begin{bmatrix} \color{green}0 \\ \color{green}1 \end{bmatrix}$ and $\hat j$ lands on $\begin{bmatrix} \color{red}-1 \\ \color{red}0 \end{bmatrix}$.

So the matrix which can do this transformation is $

\begin{bmatrix}

\color{green}0 & \color{red}-1 \\

\color{green}1 & \color{red}0

\end{bmatrix}

$

To rotate any vector 90 degrees counterclockwise, you just multiply it by this matrix.

2.3 Matrix multiplication

We may want to apply two transformations, for example, firstly rotate the plane 90 degrees counterclockwise and then apply a shear. This is called the composition of a rotation and a shear.

To do this, we multiply the vector by a rotation matrix, then multiply it by a shear. Remember, when we apply a transformation, we put the matrix on the left.

we can also multiply the vector by a composition vector.

$$

\color{pink}

\underbrace{

\begin{bmatrix}

1 & 1 \\

0 & 1

\end{bmatrix}

}_{Shear}

\color{black}\bigg(

\color{green}

\underbrace{

\begin{bmatrix}

0 & -1 \\

1 & 0

\end{bmatrix}

}_{Rotation}

\color{black}

\begin{bmatrix}

x \\

y

\end{bmatrix}

\color{black}\bigg)

=

\underbrace{

\begin{bmatrix}

1 & -1 \\

1 & 0

\end{bmatrix}

}_{Composition}

\begin{bmatrix}

x \\

y

\end{bmatrix}

$$

We call the new composition matrix is the product of the original two matrices.

$$

\color{pink}

\underbrace{

\begin{bmatrix}

1 & 1 \\

0 & 1

\end{bmatrix}

}_{Shear}

\color{green}

\underbrace{

\begin{bmatrix}

0 & -1 \\

1 & 0

\end{bmatrix}

}_{Rotation}

\color{black}

=

\underbrace{

\begin{bmatrix}

1 & -1 \\

1 & 0

\end{bmatrix}

}_{Composition}

$$

This is the multiplication of matrices. And its geometrical meaning is to apply one transformation then another.

Notice that we read the multiplication from right to left. It means we apply the transformation represented by the matrix on the right, then apply the transformation represented by the matrix on the left.

But if we change the sequence, to apply the shear first, then rotation. What will happen?

As you can see, in matrix multiplication, $AB \neq BA$.

How to calculate multiplication of matrices in numerical way? See this example:

$$

M_2M_1=

\begin{bmatrix}

0 & 2 \\

1 & 0

\end{bmatrix}

\begin{bmatrix}

1 & -2 \\

1 & 0

\end{bmatrix}

=

\begin{bmatrix}

\color{green}? & \color{red}? \\

\color{green}? & \color{red}?

\end{bmatrix}

$$

See the matrix $M_1$, it transforms $\hat i$ to $\hat i_{transformed}=\begin{bmatrix} 1 \\ 1 \end{bmatrix}$ and $\hat j$ to $\hat j_{transformed}=\begin{bmatrix} -2 \\ 0 \end{bmatrix}$.

Then $M_2$ catches up to transform the $\hat i_{transformed}$:

$$

\begin{bmatrix}

0 & 2 \\

1 & 0

\end{bmatrix}

\begin{bmatrix}

1 \\

1

\end{bmatrix}

=

\begin{bmatrix}

\color{green}2 \\

\color{green}1

\end{bmatrix}

$$

And $\hat j_{transformed}$ is also be transformed by $M_2$:

$$

\begin{bmatrix}

0 & 2 \\

1 & 0

\end{bmatrix}

\begin{bmatrix}

-2 \\

0

\end{bmatrix}

=

\begin{bmatrix}

\color{red}0 \\

\color{red}-2

\end{bmatrix}

$$

Then the composition matrix is the result combining two new vectors

$$

M_2M_1=

\begin{bmatrix}

0 & 2 \\

1 & 0

\end{bmatrix}

\begin{bmatrix}

1 & -2 \\

1 & 0

\end{bmatrix}

=

\begin{bmatrix}

\color{green}2 & \color{red}0 \\

\color{green}1 & \color{red}-2

\end{bmatrix}

$$

As you can see, the basis vectors are transformed twice then become new vectors. Then the new vectors are combined to be a new matrix telling the transformation represented by the combination of two matrices.

2.4 When are A and B allowed to be multiplied

The $m \times n$ matrix is signified as $M_{m \times n}$

We use $a_{ij}$ to describe the entry of $A$ on line i, column j.

$$

A_{\color{blue}{m}\times\color{red}{k}}

B_{\color{red}{k}\times\color{green}{n}}=

C_{\color{blue}{m}\times\color{green}{n}}

$$

That means the multiplication must be a left matrix with k columns and a right matrix with k rows.

If the left matrix has m rows, and the right matrix has n columns, the result will have m rows and n columns.

2.5 Five ways to calculate the multiplication of matrices.

2.5.1 Dot production of vector [$row_i$] and vector [$colomn_j$]

$$

\begin{bmatrix}

& & & \vdots \\

a_{i1} & a_{i2} & \cdots & a_{ik} \\

& & & \vdots

\end{bmatrix}

\begin{bmatrix}

& b_{1j} & \\

& b_{2j} & \\

& \vdots & \\

\cdots & b_{kj} & \cdots

\end{bmatrix}=

\begin{bmatrix}

& \vdots & & \\

\cdots & c_{ij} & \cdots & \\

& \vdots & &

\end{bmatrix}

$$

$$

c_{ij}=

\begin{bmatrix}a_{i1} & a_{i2} & \cdots & a_{ik}\end{bmatrix} \cdot

\begin{bmatrix}b_{1j}\\b_{2j}\\\vdots\\b_{kj}\end{bmatrix}

=a_{i1}b_{1j}+a_{i2}b_{2j}+\cdots+a_{ik}b_{kj}

=\sum^{k}_{p=1}a_{ip}b_{pj}

$$

2.5.2 Each column of C is the linear combination of A

$$

\begin{bmatrix}

A_{col_1} & A_{col_2} & \cdots & A_{col_k}

\end{bmatrix}

\begin{bmatrix}

\cdots & b_{1j} & \cdots \\

\cdots & b_{2j} & \cdots \\

\cdots & \vdots & \cdots \\

\cdots & b_{kj} & \cdots

\end{bmatrix}=

\begin{bmatrix}

\cdots & (b_{1j}A_{col_1} & b_{2j}A_{col_2} & \cdots & b_{kj}A_{col_k}) & \cdots

\end{bmatrix}

$$

2.5.3 Each row of C is the linear combination of B

$$

\begin{bmatrix}

\vdots & \vdots & \vdots & \vdots \\

a_{i1} & a_{i2} & \cdots & a_{ik} \\

\vdots & \vdots & \vdots & \vdots

\end{bmatrix}

\begin{bmatrix}

B_{row_1} \\ B_{row_2} \\ \vdots \\ B_{row_k}

\end{bmatrix}=

\begin{bmatrix}

\vdots \\

(a_{i1}B_{row_1} + a_{i2}B_{row_2} + \cdots + a_{ik}B_{row_k}) \\

\vdots \\

\end{bmatrix}

$$

2.5.4 Multiply columns of A by rows of B

$$

\begin{bmatrix}

A_{col_1} & A_{col_2} & \cdots & A_{col_k}

\end{bmatrix}

\begin{bmatrix}

B_{row_1} \\ B_{row_2} \\ \vdots \\ B_{row_k}

\end{bmatrix}=

A_{col_1}B_{row_1}+A_{col_2}B_{row_2}+\cdots+A_{col_k}B_{row_k}

$$

2.5.5 Block Multiplication

$$

\begin{bmatrix}\begin{array}{c | c}

A_1 & A_2 \\ \hline{} A_3 & A_4

\end{array}\end{bmatrix}

\begin{bmatrix}\begin{array}{c | c}

B_1 & B_2 \\ \hline{} B_3 & B_4

\end{array}\end{bmatrix}=

\begin{bmatrix}\begin{array}{c | c}

A_1B_1+A_2B_3 & A_1B_2+A_2B_4 \\ \hline{}

A_3B_1+A_4B_3 & A_3B_2+A_4B_4

\end{array}\end{bmatrix}

$$

2.6 Properties of Matrix Multiplication

- Commutative Law of Addition: $A+B=B+A$

- Associativity Law of Multiplication: $A(BC)=(AB)C$

- Left Distributive Law of Matrix Multiplication: $A(B+C)=AB+AC$

2.7 Other Basic Matrix Arithmetics And Terminologies

2.7.1 Identity Matrix

An identity matrix, sometimes called a unit matrix, is a diagonal matrix with all its diagonal entries equal to 1 , and zeroes everywhere else.

$$

I=

\begin{bmatrix}

1 & 0 & \cdots & 0 \\

0 & 1 & \cdots & 0 \\

\vdots & \vdots & \ddots & \vdots \\

0 & 0 & \cdots & 1

\end{bmatrix}

$$

After multiplying an identity matrix by a vector, it will do nothing to the vector.

2.7.2 Zero Matrix

A zero matrix is a matrix that has all its entries equal to zero.

$$

O=

\begin{bmatrix}

0 & 0 & \cdots & 0 \\

0 & 0 & \cdots & 0 \\

\vdots & \vdots & \ddots & \vdots \\

0 & 0 & \cdots & 0

\end{bmatrix}

$$

2.7.3 Diagonal Matrix

A zero matrix is a matrix whose entries are all zero except those in diagonal.

$$

D = diag(a_1,a_2,\cdots,a_n) =

\begin{bmatrix}

a_1 & 0 & \cdots & 0 \\

0 & a_2 & \cdots & 0 \\

\vdots & \vdots & \ddots & \vdots \\

0 & 0 & \cdots & a_n

\end{bmatrix}

$$

2.7.4 Addition and Subtraction

The sum (or difference) of two compatible matrices is a matrix of the same size, with each entry the sum (or difference) of the corresponding entries of the two matrices.

$$

\begin{bmatrix}

a_{11} & a_{12} & \cdots & a_{1n} \\

a_{21} & a_{22} & \cdots & a_{2n} \\

\vdots & \vdots & & \vdots \\

a_{m1} & a_{m2} & \cdots & a_{mn}

\end{bmatrix}

\pm

\begin{bmatrix}

b_{11} & b_{12} & \cdots & b_{1n} \\

b_{21} & b_{22} & \cdots & b_{2n} \\

\vdots & \vdots & & \vdots \\

b_{m1} & b_{m2} & \cdots & b_{mn}

\end{bmatrix}

=

\begin{bmatrix}

a_{11} \pm b_{11} & a_{12} \pm b_{12} & \cdots & a_{1n} \pm b_{1n} \\

a_{21} \pm b_{21} & a_{22} \pm b_{22} & \cdots & a_{2n} \pm b_{2n} \\

\vdots & \vdots & & \vdots \\

a_{m1} \pm b_{m1} & a_{m2} \pm b_{m2} & \cdots & a_{mn} \pm b_{mn}

\end{bmatrix}

$$

$$

c_{ij} = a_{ij} \pm b_{ij}

$$

2.7.5 Scalar

$$

\lambda A=

\lambda

\begin{bmatrix}

a_{11} & a_{12} & \cdots & a_{1n} \\

a_{21} & a_{22} & \cdots & a_{2n} \\

\vdots & \vdots & & \vdots \\

a_{m1} & a_{m2} & \cdots & a_{mn}

\end{bmatrix}

=

\begin{bmatrix}

\lambda a_{11} & \lambda a_{12} & \cdots & \lambda a_{1n} \\

\lambda a_{21} & \lambda a_{22} & \cdots & \lambda a_{2n} \\

\vdots & \vdots & & \vdots \\

\lambda a_{m1} & \lambda a_{m2} & \cdots & \lambda a_{mn}

\end{bmatrix}

$$

$$

-A=(-1)A=

-

\begin{bmatrix}

a_{11} & a_{12} & \cdots & a_{1n} \\

a_{21} & a_{22} & \cdots & a_{2n} \\

\vdots & \vdots & & \vdots \\

a_{m1} & a_{m2} & \cdots & a_{mn}

\end{bmatrix}

=

\begin{bmatrix}

-a_{11} & -a_{12} & \cdots & -a_{1n} \\

-a_{21} & -a_{22} & \cdots & -a_{2n} \\

\vdots & \vdots & & \vdots \\

-a_{m1} & -a_{m2} & \cdots & -a_{mn}

\end{bmatrix}

$$

Properties:

- Associativity Law: $k(lA)=(kl)A$

- Left Distributive Law: $(k+l)A=kA+kB, k(A+B)=kA+kB$

- if $kA=0$, then $k=0$ or $A=0$

- $k(AB)=(kA)B=A(kB)$

2.7.6 the 𝑘th power of 𝐴

For a square matrix $A$ and positive integer 𝑘, the 𝑘th power of $A$ is defined by multiplying this matrix by itself repeatedly.

$$

\begin{align}

& A^1=A \\

& A^2=AA \\

& A^k=\underbrace{AA \cdots A}_{k}, k \in N \\

& A^k=A^{k-1}A, k=2, 3, \cdots \\

& A^0=I

\end{align}

$$

Properties:

- $A^{k}A^{l}=A^{k+l}$

- $(A^k)^l=A^{kl}$

2.7.7 Transpose

Switch the rows and columns of the matrix, so that the first row becomes the first column, the second row becomes the second column, and so on.

$$

A=

\begin{bmatrix}

a_{11} & a_{12} & \cdots & a_{1n} \\

a_{21} & a_{22} & \cdots & a_{2n} \\

\vdots & \vdots & & \vdots \\

a_{m1} & a_{m2} & \cdots & a_{mn}

\end{bmatrix}

$$

$$

A^T=

\begin{bmatrix}

a_{11} & a_{21} & \cdots & a_{m1} \\

a_{12} & a_{22} & \cdots & a_{m2} \\

\vdots & \vdots & & \vdots \\

a_{1n} & a_{m2} & \cdots & a_{mn}

\end{bmatrix}

$$

Properties:

- $(A^T)^T=A$

- $(A+B)^T=A^T+B^T$

- $(kA^T)=kA^T$

- $(AB)^T=B^{T}A^{T}$

A symmetric matrix is a square matrix which is symmetric about its leading diagonal: $A=A^T$

On two sides of the diagonal line the entries are identical:

$$

\begin{bmatrix}

\color{red}a & \color{blue}x & \color{green}y \\

\color{blue}x & \color{red}b & \color{purple}z \\

\color{green}y & \color{purple}z & \color{red}c

\end{bmatrix}

$$

Properties of symmetric matrix:

- The sum and difference of two symmetric matrices is symmetric.

- This is not always true for the product: given symmetric matrices $A$ and $B$, then $AB$ is symmetric if and only if $A$ and $B$ commute, i.e. if $AB=BA$.

- For any integer $n$, $A^n$ is symmetric if $A$ is symmetric.

- Rank of a symmetric matrix $A$ is equal to the amount of non-zero eigenvalues of $A$.

- Given any non-square matrix A, then $AA^T$ is a symmetric matrix.

An antisymmetric (or skew-symmetric) matrix is a matrix $A$ when $A^T=-A$

Properties of antisymmetric matrix:

- If you add two antisymmetric matrices, the result is another antisymmetric matrix.

- If you multiply an antisymmetric matrix by a constant, the result is another antisymmetric matrix.

- All of the diagonal entries of an antisymmetric matrix are 0.

3. Determinant

3.1 What is determinant

When a linear transformation be applied, then space is stretched or squashed.

If there is an area applied with a linear transformation, how much is the area scaled?

To measure the factor by which the area of a given region increases or decreased, we can focus on the unit 1x1 square formed by $\hat i$ and $\hat j$.

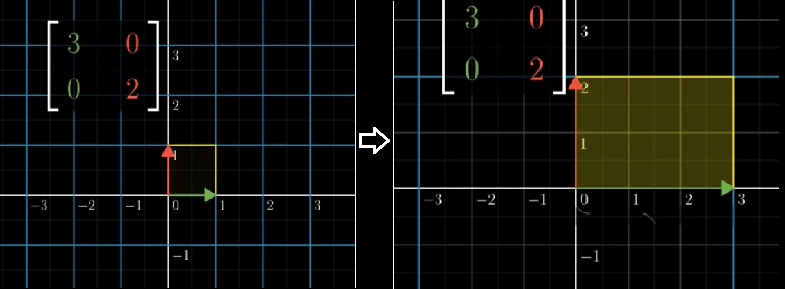

For example: $\begin{bmatrix} 3 & 0 \\ 0 & 2 \end{bmatrix}$

As you can see, the area of 1x1 square was scaled to 3x2, i.e. 6.

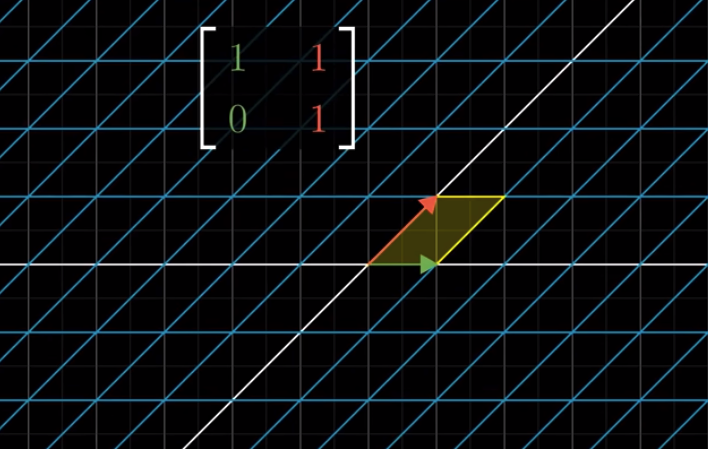

Another example: $\begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix}$

It’s easy to calculate that the area of this parallelogram is still 1 after transformation.

Actually, if you know how much the area of the unit square changes, it can tell you how the area of any possible region in space changes after transformation whatever the size is. Because the transformation is linear, as it’s noted before.

This very special scaling factor is called the determinant of the transformation.

$$

det(A)=det\bigg(

\begin{bmatrix}

a_{11} & a_{12} \\

a_{21} & a_{22}

\end{bmatrix}

\bigg)=

\begin{vmatrix}

a_{11} & a_{12} \\

a_{21} & a_{22}

\end{vmatrix}

$$

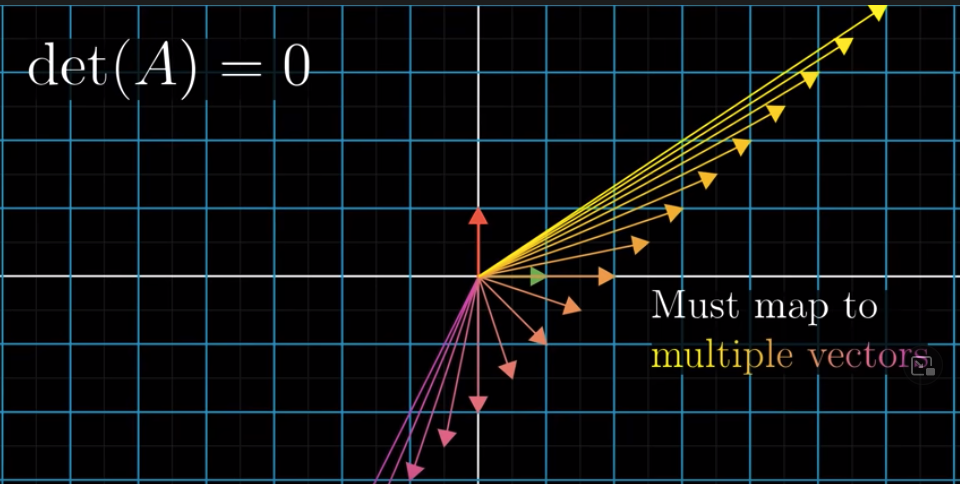

The value of the determinant tells the factor of the transformation. If the determinant is 0, it tells that all of the space are squished onto a line or even a single point. At this situation, the $\hat i_{transformed}$ and the $\hat j_{transformed}$ are linear dependent.

3.2 The sign of the determinant

But what if the determinant is negative? What’s the meaning of scaling an area by a negative number?

The sign of a determinant is all about orientation. If the determinant is negative, the transformation is like flipping the space over, or inverting the orientation of space.

Another way to think about it is that in the beginning $\hat j$ is to the left of $\hat i$. If after the transformation, $\hat j$ is on the right of $\hat i$, the orientation of space has been inverted, and the determinant will be negative.

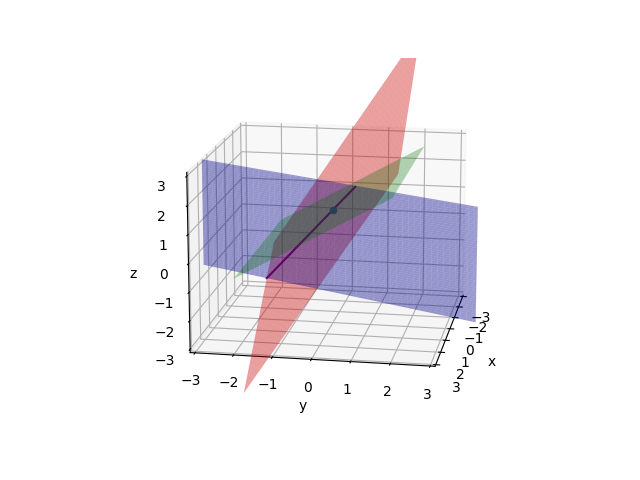

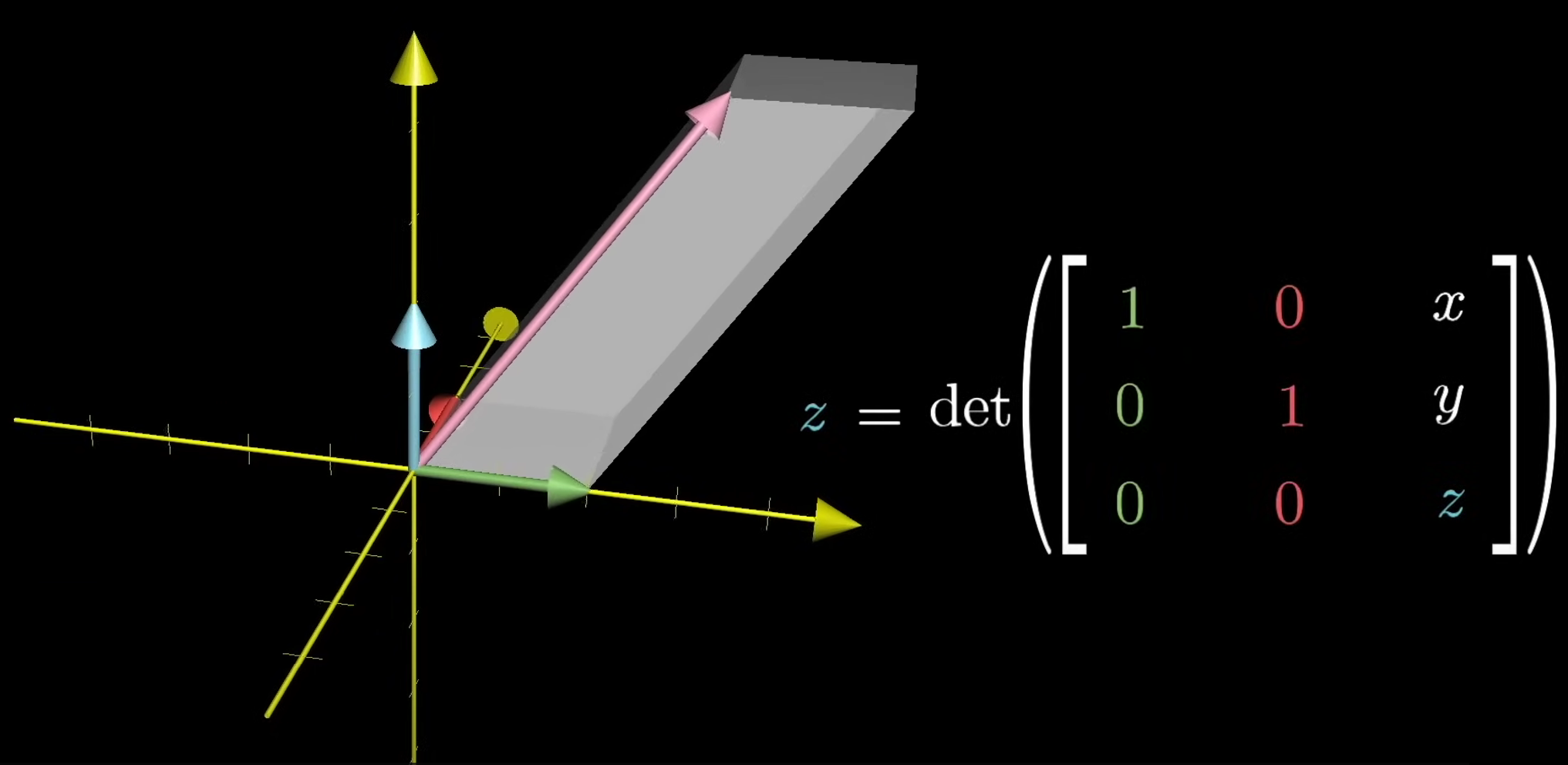

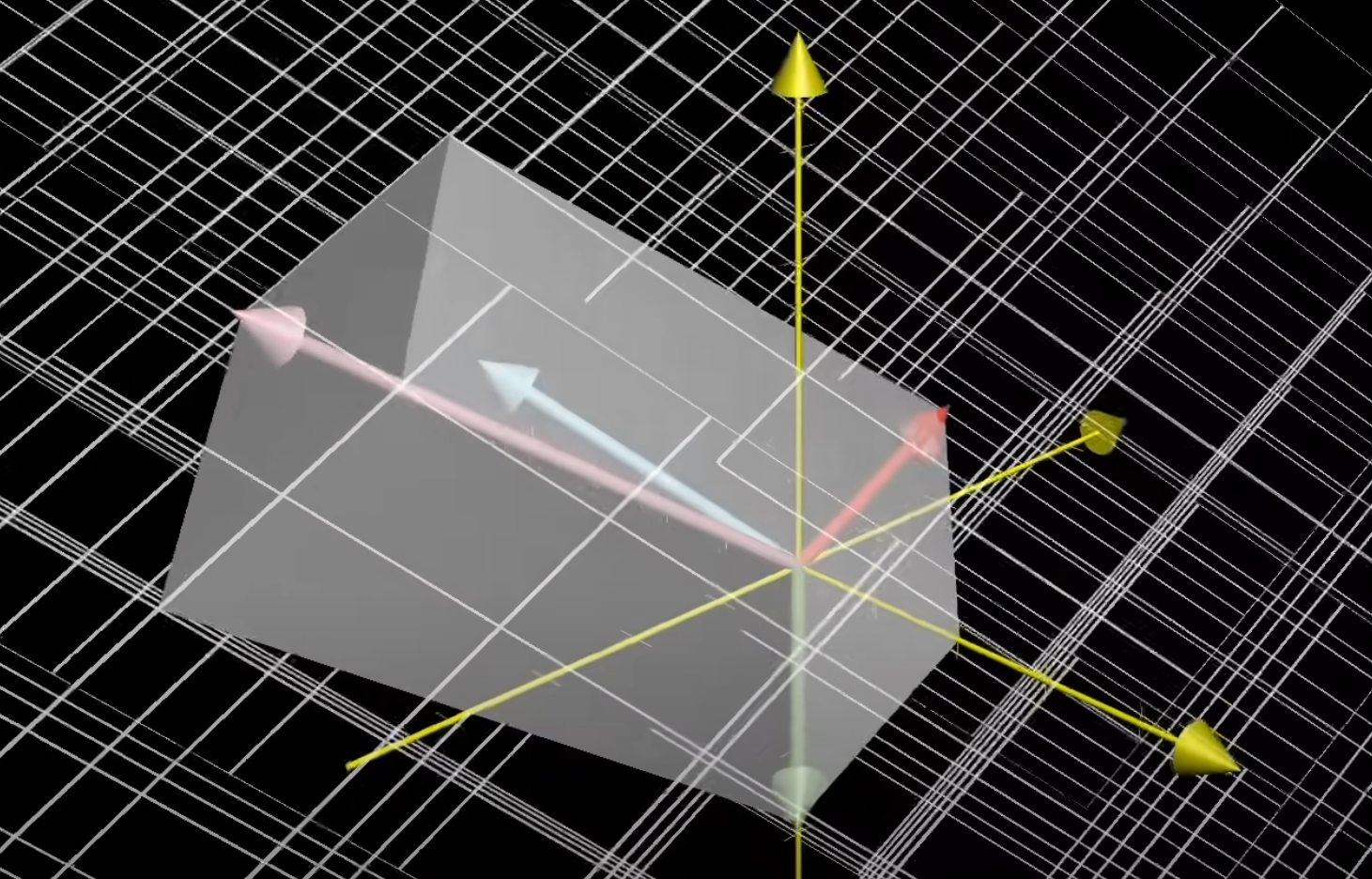

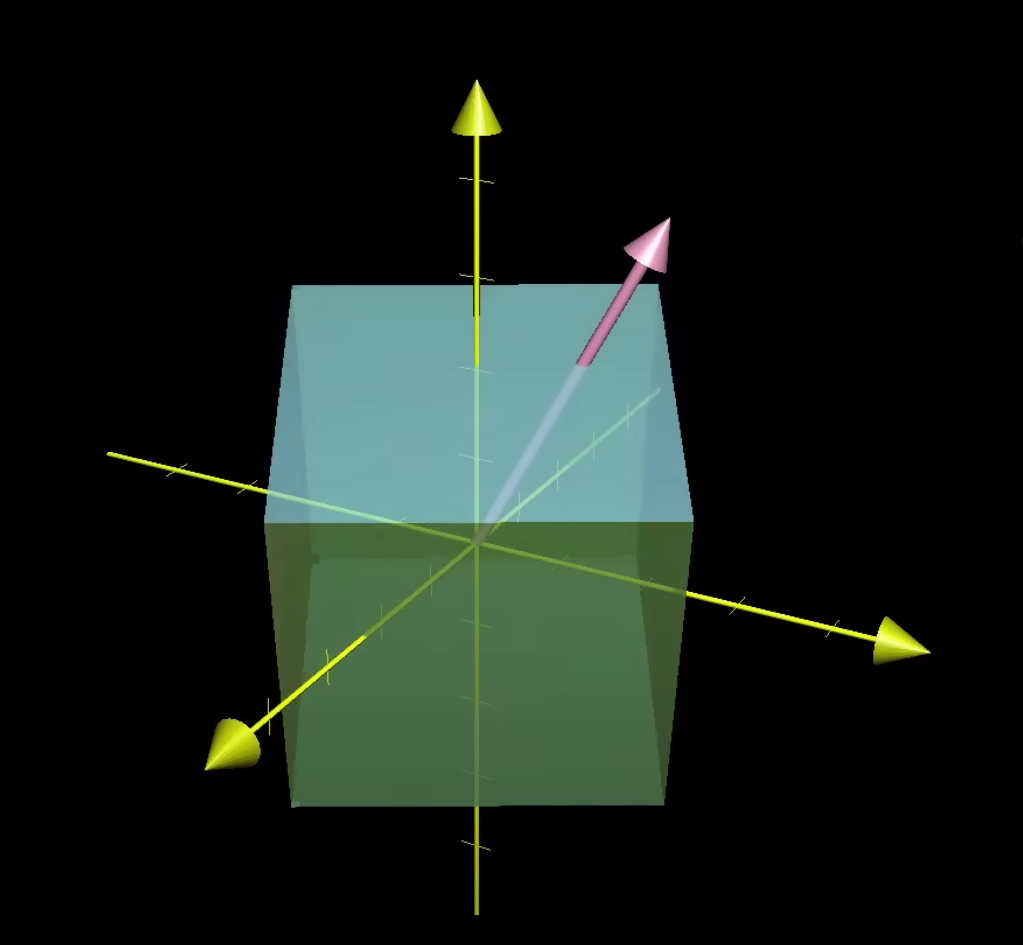

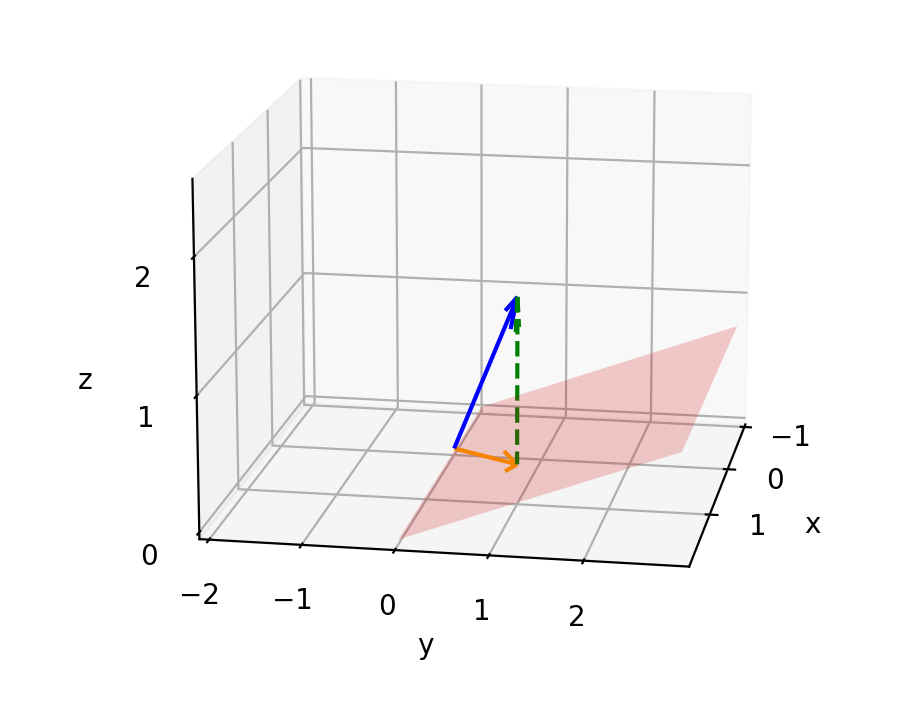

3.3 Determinant of 3D linear transformation

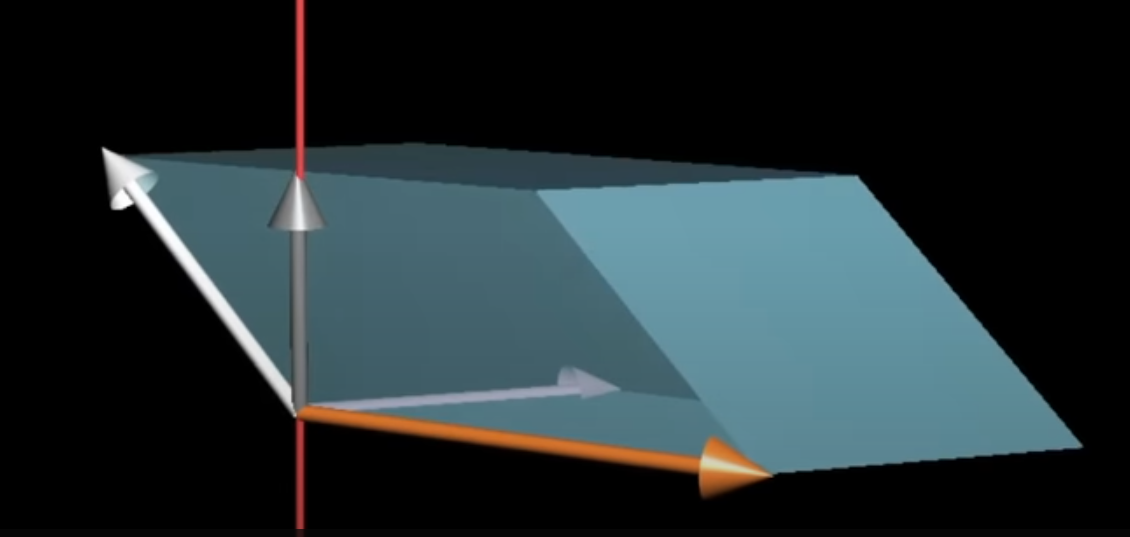

In comparison with 2D, it’s about scaling volumes in 3D linear transformation.

After transformation, the unit 1x1x1 cube will be a parallelepiped, a plane, a line or a point.

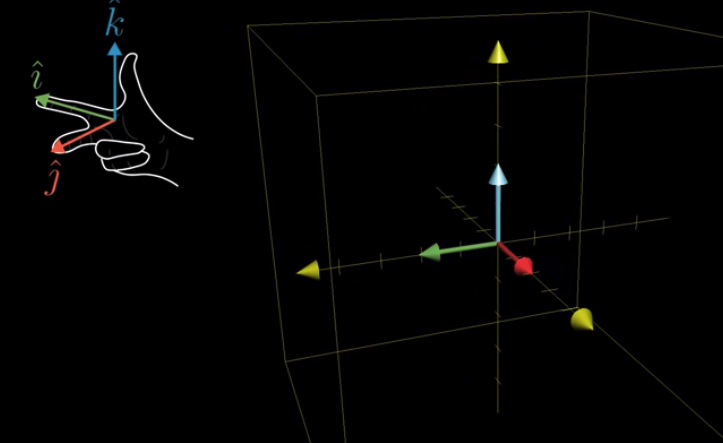

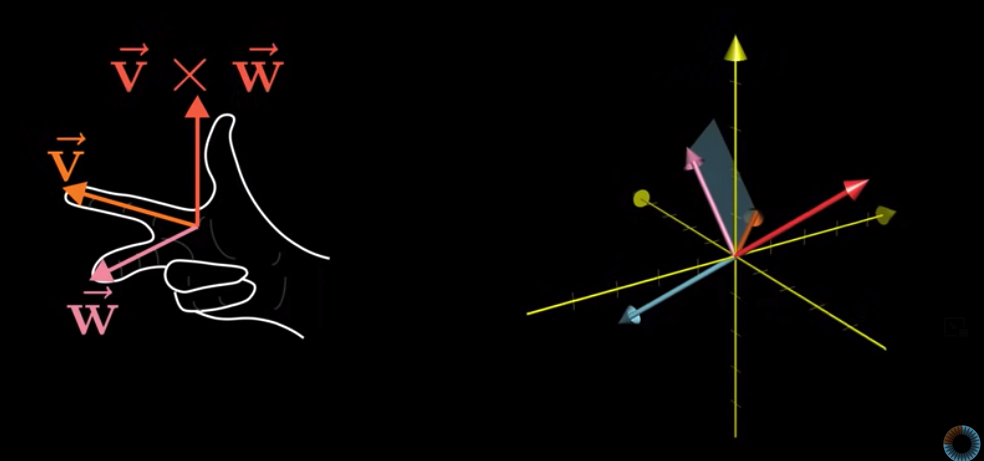

the determinant of 3D linear transformation is the scaling factor of volumes, but what’s the meaning of the sign, or how to describe the orientation?

Right-hand rule:

The image tells the orientation of positive determinant. Otherwise, it is negative.

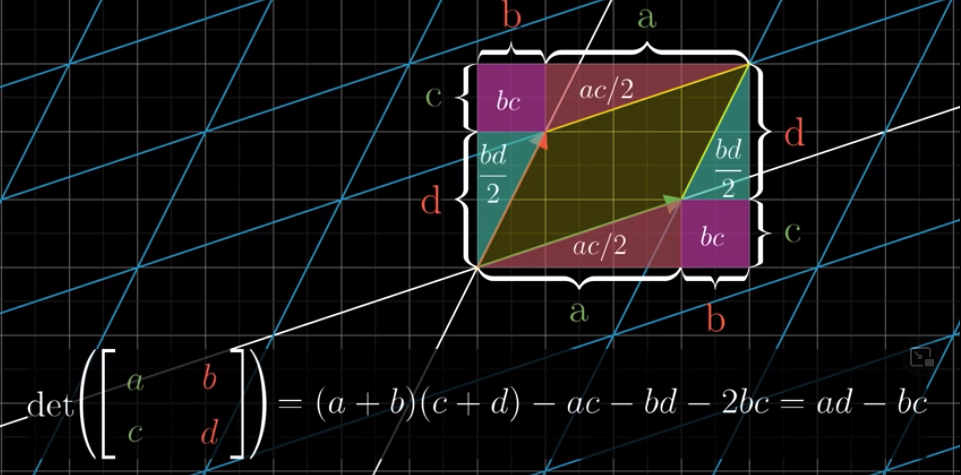

3.4 How to calculate the determinant

In 2x2 case

$$

\begin{equation}

\begin{vmatrix}

\color{green}a & \color{red}b \\

\color{green}c & \color{red}d

\end{vmatrix}

=

\color{green}a \color{red}d - \color{red}b \color{green}c

\end{equation}

$$

It’s easy to prove:

In 3x3 case

$$

\begin{equation}

\begin{vmatrix}

\color{green}a & \color{red}b & \color{blue}c \\

\color{green}d & \color{red}e & \color{blue}f \\

\color{green}g & \color{red}h & \color{blue}i

\end{vmatrix}

=

\color{green}a \color{black}

\begin{vmatrix}

\color{red}e & \color{blue}f \\

\color{red}h & \color{blue}i

\end{vmatrix}-

\color{red}b \color{black}

\begin{vmatrix}

\color{green}d & \color{blue}f \\

\color{green}g & \color{blue}i

\end{vmatrix}+

\color{blue}c \color{black}

\begin{vmatrix}

\color{green}d & \color{red}e \\

\color{green}g & \color{red}h

\end{vmatrix}

=

\color{green}a \color{red}e \color{blue}i \color{black}+ \color{red}b \color{blue}f \color{green}g \color{black}+ \color{blue}c \color{green}d \color{red}h \color{black}-

\color{green}a \color{blue}f \color{red}h \color{black}- \color{red}b \color{green}d \color{blue}i \color{black}- \color{blue}c \color{red}e \color{green}g

\end{equation}

$$

The details and tricks to calculate the determinant will be introduced in the ensuing chapters.

4. Equations, Inverse Matrices and Rank

4.1 A simple example of two equations with two unknowns

Linear algebra is useful in computer science, robotics and so forth. But one of the main reasons why linear algebra is broadly applicable is that it lets us solve certain systems of equations. And luckily this kind of equations are linear equations, which means the equations only consist of additions of unknowns scaled by some constant. No exponents or fancy functions or multiplying two variables together.

These do not exist in linear equations: $x^2$, $\sin x$, $xy$

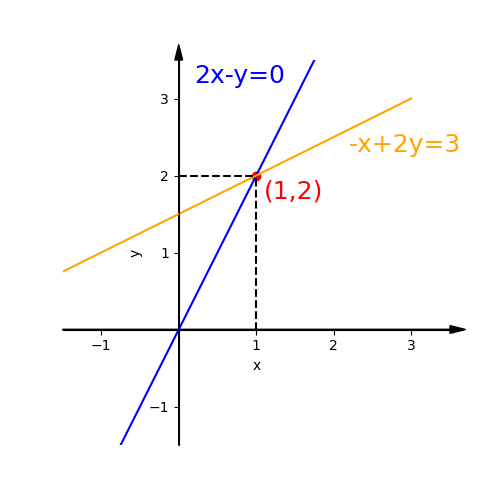

$$

\begin{cases}

\ 2x&-y&=0 \\ -x&+2y&=3

\end{cases}

$$

And as you can see, all the unknowns vertically line up on the left and the lingering constants are on the right.

And you can package all of the equations together into a single vector equation like this:

$$

\begin{equation}

\underbrace{\begin{bmatrix} 2 & -1 \\ -1 & 2 \end{bmatrix}}_{A}

\underbrace{\begin{bmatrix} x \\ y \end{bmatrix}}_{\vec X}

=

\underbrace{\begin{bmatrix} 0 \\ 3 \end{bmatrix}}_{\vec b}

\end{equation}

$$

$A$ is coefficient matrix. $\vec X$ is vector of unknowns. $\vec b$ is vector of the right-hand numbers of equations.

Using three letters to express this three matrices:

$$ A\mathbf{X}=\mathbf{b} $$

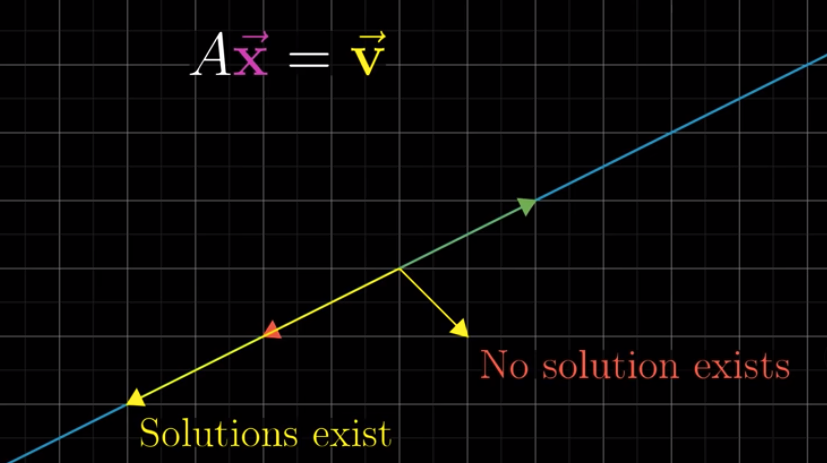

That means we should look for a vector $\vec X$, which, after applying the transformation, lands on $\vec b$.

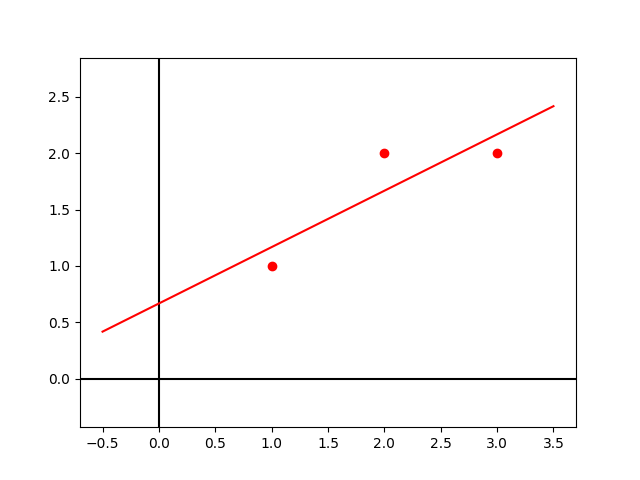

We can draw the row pictures of equations on a xy plane. Obviously They are two lines. all the points in each line are solutions of each equation.

The intersection of two line is the solution of the equations.

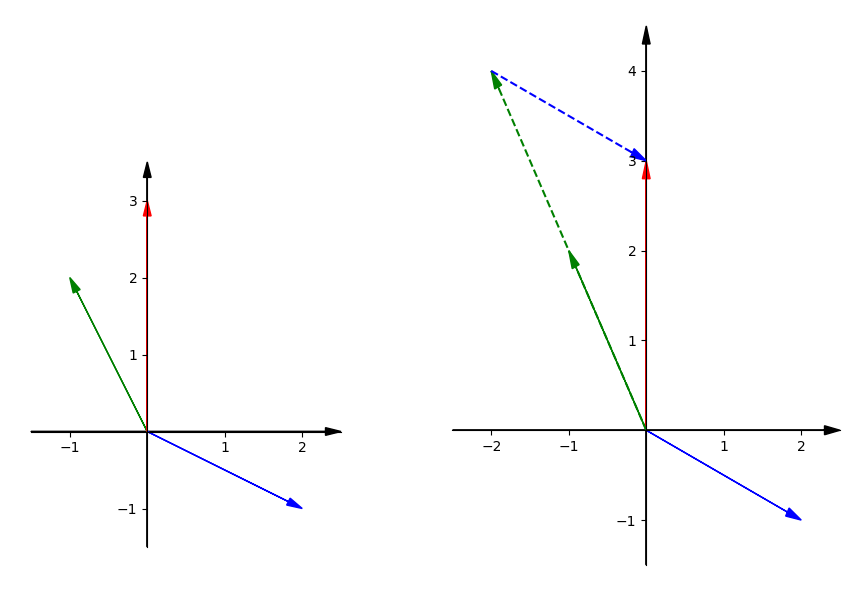

Then the column pictures:

Focusing on the columns of matrix, we can get this:

$$

\begin{equation}

x \begin{bmatrix} 2 \\ -1 \end{bmatrix} + y

\begin{bmatrix} -1 \\ 2 \end{bmatrix} =

\begin{bmatrix} 0 \\ 3 \end{bmatrix}

\end{equation}

$$

This equation is asking us to find the x and y to combine the vector $x\begin{bmatrix} 2 \\ -1 \end{bmatrix}$ and the vector $y\begin{bmatrix} -1 \\ 2 \end{bmatrix}$ to get the vector $\begin{bmatrix} 0 \\ 3 \end{bmatrix}$. That is the linear combination we need to find out.

Draw three vectors mentioned above. We already know the right x and y. So we can get the red vector when combining 2 green vectors and 1 blue vector.

4.2 Three equations with 3 unknowns

\begin{cases}

2x & -y & &= 0 \\

-x & +2y & -z &= -1 \\

& -3y & +4z &= 4

\end{cases}

We can insert zero coefficients when the unknown doesn’t show up.

$$

\begin{cases}

2\color{green}x & \color{black}-1\color{red}y & \color{black}+0\color{blue}z\color{black} & =0 \\

(-1)\color{green}x & \color{black}+2\color{red}y & \color{black}-1\color{blue}z\color{black} & =-1 \\

1\color{green}x & \color{black}-3\color{red}y & \color{black}+4\color{blue}z\color{black} & =4

\end{cases}

$$

Package all of the equations together into a single vector equation like this:

$$

\begin{equation}

\underbrace{

\begin{bmatrix}

2 & -1 & 0 \\

-1 & 2 & -1 \\

0 & -3 & 4

\end{bmatrix}

}_{A}

\underbrace{

\begin{bmatrix}

\color{green}x \\

\color{red}y \\

\color{blue}z

\end{bmatrix}

}_{\vec X}

=

\underbrace{

\begin{bmatrix}

0 \\

-1 \\

4

\end{bmatrix}

}_{\vec b}

\end{equation}

$$

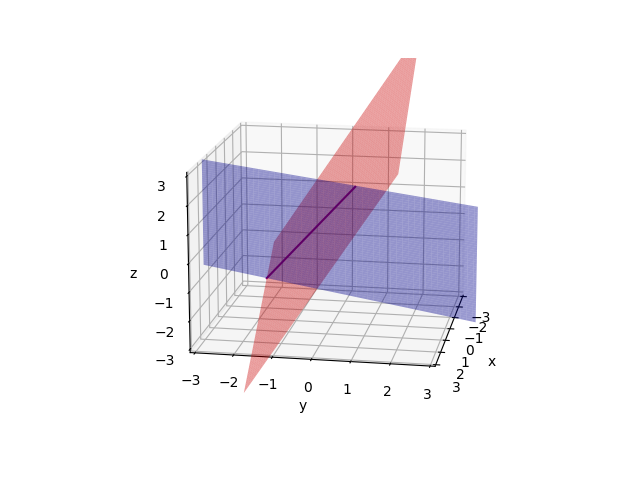

See the row pictures:

Firstly draw two planes(2x-y=0 and -x+2y-z=-1). These planes meet in a line.

Then draw the third plane(-3y+4z=4).They meet in a point(0, 0, 1). And this point is solution of equations.

This row picture is complicated. In 4x4 case or higher dimensions, the problem will be more complex, and we have no idea to draw a picture like this.

Then column pictures:

$$

\begin{equation}

x \begin{bmatrix} 2 \\ -1 \\ 0 \end{bmatrix} +

y \begin{bmatrix} -1 \\ 2 \\ -3 \end{bmatrix} +

z \begin{bmatrix} 0 \\ -1 \\ 4 \end{bmatrix} =

\begin{bmatrix} 0 \\ -1 \\ 4 \end{bmatrix}

\end{equation}

$$

Solve this equation, so we can get the linear combination of three vectors. Each vector is a three dimensions one.

Then we can get $x=0, y=0, z=1$.

If we change the $b$. For example $b = x\begin{bmatrix} 1 \\ 1 \\ -3 \end{bmatrix}$. We can also get the linear combination $x=1, y=1, z=0$.

4.3 Can Every Equation Be Solved?

See the last picture. Those three planes are not parallel or special.

Think about this:

Can I solve $A\mathbf{X}=b$ for every $b$?

If we change the x, y and z in this example, we can find that the new vector produced can point at everywhere in this space.

So in linear combination words, the problem is

Do the linear combinations of the columns fill three-dimensional space?

In this example, for the matrix $A$, the answer is yes.

Imagine that if three vectors are in the same plane, and the vector $b$ is out of this plane. Then the combination of three vectors is impossible to produce $b$. In this case, $A$ is called singular matrix. And $A$ would be not invertible.

For non-square matrix like 3x4 matrix, i.e. three equations with four unknowns, it will be introduced later.

4.4 Inverse Matrix

After applying a linear transformation $a$, if we want to undo it, we can look for a matrix $A^{-1}$ to restore the transformation.

For example, $A=\begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$ was a counterclockwise rotation by 90 degrees, then the inverse of A would be a clockwise rotation by 90 degrees: $A^{-1}=\begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}$.

Obviously if you multiply a matrix by its inverse, it equals identity matrix. Because after applying the transformation then undo it, the final effect is that nothing happened.

$$

A^{-1}A=AA^{-1}=I

$$

When you have $A^{-1}$, you can multiply it with $A\mathbf{X}=\mathbf{b}$, then it would be:

$$

\begin{align}

A^{-1}A\mathbf{X} &= A^{-1}\mathbf{b} \\

\mathbf{X} &= A^{-1}\mathbf{b}

\end{align}

$$

But when the determinant of the matrix is zero, it squashes space into a smaller dimension. In this case, there is no inverse.

For example, there is a 2x2 matrix squashing space into a line or point. You cannot turn it back into a plane. Because it’s impossible find a linear function to map every vector on the line or point to every vector on the plane.

Properties of Inverse Matrix:

- $(A^{-1})^{-1} = A$

- $(kA)^{-1} = k^{-1}A^{-1} \text{ for any nonzero scalar }k$

- For any invertible nxn matrices A and B, $(AB)^{-1}=B^{-1}A^{-1}$

- $(A^T)^{-1}=(A^{-1})^T$

- $det(A^{-1})=det(A)$

4.5 When does inverse exist

Not all square matrices have inverses. The matrices which have inverses are called invertible or non-singular matrices.

For singular matrices, they have no inverses. And their determinant is 0. And we can find a non-zero vector $x$ with $Ax=0$.

For example, if $A=\begin{bmatrix}1 & 3 \\ 2 & 6\end{bmatrix}$, the columns of A are both on the same line. So every linear combination is on that line and is impossible to get $\begin{bmatrix}1 \\ 0\end{bmatrix}$ or $\begin{bmatrix}0 \\ 1\end{bmatrix}$. That means there is no matrix can be multiplied by $A$ to get $I$.

If $x=\begin{bmatrix}3 \\ -1\end{bmatrix}$, then $Ax=\begin{bmatrix}1 & 3 \\ 2 & 6\end{bmatrix}\begin{bmatrix}3 \\ -1\end{bmatrix}=0$.

Proof: Suppose that we can find a non-zero vector $x$ with $Ax=0$ and A is invertible, then $A^{-1}Ax=Ix=x=0$. So the assumption is false.

4.6 Rank

When the determinant of matrix is zero, for example in 2x2 matrix, it’s possible to find the solution when the solution is on the same line.

Focus on 3x3 matrix. The transformation may turn the space into a plane, a line, a point, or just stretching or squashing the space.

Rank describes the number of dimensions in all the possible outputs of a linear transformation.

For example, if applying a transformation of 3x3 matrix turns the space into a 2-dimensional plane, the rank of this matrix is 2.

If a 3-D transformation has a non-zero determinant and its output fills all of the 3D space, it has a rank of three. It does not collapse the dimension. In this case, we say the matrix has a full rank.

The set of all possible outputs of $A\vec v$ is called the column space of the matrix $A$.

Notice the zero vector($\begin{bmatrix} 0 \\ 0 \end{bmatrix}$ or $\begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}$…) is always included in the column space, since linear transformation must keep the origin fixed in place.

For a full rank transformation, the only vector that lands at the origin is the zero vector itself.

But for matrices that aren’t full rank, which squashed the space into a smaller dimension, there are a bunch of vectors land on zero. This set of vectors that lands on zero is called the null space or the kernel of the matrix.

In terms of the linear system of equations $A\mathbf{X}=\vec b$, if the vector $\vec b$ happens to be the zero vector, the null space gives you all of the possible solutions to the equation.

5. Elimination with Matrices

5.1 Elimination

Now let’s think about how to solve equations.

For example:

$$

\begin{cases}

x & +2y & +z &= 2 \quad &(1) \\

3x & +8y & +z &= 12 \quad &(2) \\

&\ \ 4y & +z &= 2 \quad &(3)

\end{cases}

$$

And the matrix $A=\begin{bmatrix} 1 & 2 & 1 \\ 3 & 8 & 1 \\ 0 & 4 & 1 \end{bmatrix}$.

If there is an equation, we can accept that if it multiplies a number by or add a number to both sides.

For example:

If $ x + 2 = 3y $, then $ 2 \times (x + 2) = 2 \times 3y $ has the same solution. So does $ 2 \times (x + 2) + z = 2 \times 3y + z $.

So if (1) times a number and then add to (2), it won’t change the solutions of equations. If (1) times $-3$ and then add to (2), we will get $ 2y - 2z = 6$, the $x$ is knocked out. This method is called elimination. It is a common way to solve systems of equations.

If speaking in matrix words, this matrix operation is:

$$

\begin{bmatrix} \boxed{\color{red}{1}} & 2 & 1 \\ 3 & 8 & 1 \\ 0 & 4 & 1 \end{bmatrix}

\xrightarrow{row_2 \ - \ 3row_1}

\begin{bmatrix} \boxed{\color{red}{1}} & 2 & 1 \\ 0 & 2 & -2 \\ 0 & 4 & 1 \end{bmatrix}

$$

The boxed and red number 1 is the key number of this step. We call it pivot.

What about the right-hand vector $b$? Actually Matlab finishes up the left side $A$ and then go back to the right side $b$. So we can do with it later.

In (3), the coefficient of $x$ is already 0. If not, we can eliminate it in the same way.

Next step we can do it again to eliminate the $y$ in (3). In this step the second pivot is 2. Similarly, the thrid pivot is 5

$$

\begin{bmatrix} \boxed{\color{red}{1}} & 2 & 1 \\ 0 & \boxed{\color{green}{2}} & -2 \\ 0 & 4 & 1 \end{bmatrix}

\xrightarrow{row_3 \ - \ 2row_2}

\begin{bmatrix} \boxed{\color{red}{1}} & 2 & 1 \\ 0 & \boxed{\color{green}{2}} & -2 \\ 0 & 0 & \boxed{\color{blue}{5}} \end{bmatrix}

$$

In last step, we get an upper triangular matrix $U=\begin{bmatrix} 1 & 2 & 1 \\ 0 & 2 & -2 \\ 0 & 0 & 5 \end{bmatrix}$

this form is also called echelon form.

So the purpose of elimination is to get from $A$ to $U$.

Note that pivots cannot be 0.

5.2 When does elimination fail?

If the first pivot is 0, does elimination fail? No. In last example, if we exchange the position of (1) and (3), the first pivot will be 0. But the solution of equations won’t be changed. So if the pivots is 0, try exchanging rows.

If $A=\begin{bmatrix} 1 & 2 & 1 \\ 3 & 8 & 1 \\ 0 & 4 & \boxed{-4} \end{bmatrix}$, in last step we will get a matrix $\begin{bmatrix} 1 & 2 & 1 \\ 0 & 2 & -2 \\ 0 & 0 & 0 \end{bmatrix}$. Then the third pivot is 0. It means the equations have no solution. The elimination meets its failure. In this case matrix $A$ would have not been invertible.

5.3 Back substitution

Consider $b$. We can place it to the extra column of $A$ to get an augmented matrix.

$$

[A|b]=

\begin{bmatrix} \begin{array}{ccc | c}

1 & 2 & 1 & 2 \\ 3 & 8 & 1 & 12 \\ 0 & 4 & 1 & 2 \\

\end{array} \end{bmatrix}

$$

Then we do the same operations with this matrix:

$$

\begin{bmatrix} \begin{array}{ccc | c}

1 & 2 & 1 & 2 \\ 3 & 8 & 1 & 12 \\ 0 & 4 & 1 & 2 \\

\end{array} \end{bmatrix}

\xrightarrow{row_2 \ - \ 3row_1}

\begin{bmatrix} \begin{array}{ccc | c}

1 & 2 & 1 & 2 \\ 0 & 2 & -2 & 6 \\ 0 & 4 & 1 & 2 \\

\end{array} \end{bmatrix}

\xrightarrow{row_3 \ - \ 2row_2}

\begin{bmatrix} \begin{array}{ccc | c}

1 & 2 & 1 & 2 \\ 0 & 2 & -2 & 6 \\ 0 & 0 & 5 & -10 \\

\end{array} \end{bmatrix}

$$

$b$ will be a new vector $c=\begin{bmatrix} 2 \\ 6 \\ -10 \end{bmatrix}$

And we can finally get new equations:

$$

\begin{cases}

x & +2y & +z &= 2 \\

&\ \ \ 2y & -2z &= 6 \\

& &\ \ \ 5z &= -10

\end{cases}

$$

Those are the equations $ U \mathbf{X} = c $.

And we can easily figure out that $z=-2, y=1, x=2$.

5.4 Using matrices multiplication to describe the matrices operations

How to use matrix language to describe this operation?

Last lecture we have learnt how to multiply a matrix by a column vector. Here is how to multiply a row vector by a matrix.

$$\begin{equation}

\begin{bmatrix} x & y & z \end{bmatrix}

\begin{bmatrix} a & b & c \\ d & e & f \\ g & h & i \end{bmatrix} =

x \times \begin{bmatrix} a & b & c \end{bmatrix} + y \times \begin{bmatrix} e & f & g \end{bmatrix} + z \times \begin{bmatrix} h & i & j \end{bmatrix}

\end{equation}$$

This is a row operation.

So if the row vector is on the left, the matrix does row operation. If the column vector is on the right, the matrix does column operation.

If a 3x3 matrix is multipied by $I=\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$, the matrix would not be changed. $AI = IA = A$. It means this matrix operation do nothing with the matrix $A$. And the matrix $I$ is called identity matrix.

Step 1: Subtract $3 \times row_1$ from $row_2$

$$

\begin{bmatrix} 1 & 0 & 0 \\ -3 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}

\begin{bmatrix} 1 & 2 & 1 \\ 3 & 8 & 1 \\ 0 & 4 & 1 \end{bmatrix} =

\begin{bmatrix} 1 & 2 & 1 \\ 0 & 2 & -2 \\ 0 & 4 & 1 \end{bmatrix}

$$

What does this multiplication means? Look at the row 2 of the left matrix. How does it affect the second matrix?

We know $\begin{equation}

\begin{bmatrix} -3 & 1 & 0 \end{bmatrix}

\begin{bmatrix} 1 & 2 & 1 \\ 3 & 8 & 1 \\ 0 & 4 & 1 \end{bmatrix} =

-3 \times \begin{bmatrix} 1 & 2 & 1 \end{bmatrix} + 1 \times \begin{bmatrix} 3 & 8 & 1 \end{bmatrix} + 0 \times \begin{bmatrix} 0 & 4 & 1 \end{bmatrix} =

\begin{bmatrix} 0 & 2 & -2 \end{bmatrix}

\end{equation}$

So $\begin{bmatrix}-3 & 1 & 0\end{bmatrix}$ means $-3 \times row_1 + 1 \times row_2 + 0 \times row_3$ produces the second row $\begin{bmatrix}0 & 2 & -2\end{bmatrix}$ of new matrix.

And the simple matrix $E_{21}=\begin{bmatrix} 1 & 0 & 0 \\ -3 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} $ which is used to operate the matrix $A$ is called Elementary Matrix.

$E_{21}$ means it will change the number to 0 on the position of row 2 and column 1.

Step 2: Subtract $2 \times row_2$ from $row_3$.

$$

\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & -2 & 1 \end{bmatrix}

\begin{bmatrix} 1 & 2 & 1 \\ 0 & 2 & -2 \\ 0 & 4 & 1 \end{bmatrix} =

\begin{bmatrix} 1 & 2 & 1 \\ 0 & 2 & -2 \\ 0 & 0 & 5 \end{bmatrix}

$$

We use $E_{32}$ to represent the left matrix.

These two operations can be written as $E_{32}(E_{21}A)=U$

We use two elementary matrices to produce the matrix $U$. Can we use only one matrix to do that? The answer is yes.

$$E_{32}(E_{21}A)==(E_{32}E_{21})A=EA=U,\\

\mathrm{composition\ matrix}\ E=E_{32}E_{21}$$

This method to change the parentheses is called associative law.

The composition matrix is the multiplication of two matrices. It mixes two transformations into one. And this is the meaning of matrices multiplication.

The elementary matrix to add row(j) multiplied by a number(k) to another row(i) can be written as

$$

\begin{bmatrix}

1 & & & & & & \\

& \ddots & & & & & \\

& & 1 & \cdots & k & & \\

& & & \ddots & \vdots & & \\

& & & & 1 & & \\

& & & & & \ddots & \\

& & & & & & 1

\end{bmatrix}

$$

k is on the (i, j)

The elementary matrix to scale row(i) by k:

$$

\begin{bmatrix}

1 & & & & & & \\

& \ddots & & & & & \\

& & 1 & & & & \\

& & & k & & & \\

& & & & 1 & & \\

& & & & & \ddots & \\

& & & & & & 1

\end{bmatrix}

$$

k is on the row(i)

5.5 Permutation Matrix

Another elementary matrix that exchanges two rows is called Permutation Matrix.

For example, if we want to exchange two rows of $\begin{bmatrix} a & b \\ c & d \end{bmatrix}$, what matrix can we use?

$$

\begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}

\begin{bmatrix} a & b \\ c & d \end{bmatrix} =

\begin{bmatrix} c & d \\ a & b \end{bmatrix}

$$

How if we want to exchange two columns of matrix? Put the permutation matrix on the right.

$$

\begin{bmatrix} a & b \\ c & d \end{bmatrix}

\begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix} =

\begin{bmatrix} b & a \\ d & c \end{bmatrix}

$$

According to this two examples, we know that $AB$ is not the same as $BA$. So the order of the matrix multiplication is important.

To change the row on i and j, just change the these two rows of the identity matrix. Then the modified identity matrix is the elementary matrix we need.

$$

\begin{bmatrix}

1 & & & & & & & & & & \\

& \ddots & & & & & & & & & \\

& & 1 & & & & & & & & \\

& & & 0 & & & & 1 & & & \\

& & & & 1 & & & & & & \\

& & & & & \ddots & & & & & \\

& & & & & & 1 & & & & \\

& & & 1 & & & & 0 & & & \\

& & & & & & & & 1 & & \\

& & & & & & & & & \ddots & \\

& & & & & & & & & & 1

\end{bmatrix}

$$

5.6 How to get from U back to A

See the matrix $\begin{bmatrix} 1 & 0 & 0 \\ -3 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$. It subtract $3 \times row_1$ from $row_2$. How to undo this operation?

We know $IA=A$. So if we can find out a matrix $E^{-1}$ so that $E^{-1}E=I$, then $E^{-1}EA=IA=A$.

The matrix $E^{-1}$ is called $E$ inverse.

$$

\begin{bmatrix} 1 & 0 & 0 \\ 3 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}

\begin{bmatrix} 1 & 0 & 0 \\ -3 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}=

\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}

$$

5.7 Using Elimination to get $A^{-1}$

$A=\begin{bmatrix}1 & 3 \\ 2 & 7\end{bmatrix}$ for example, suppose $A^{-1}=\begin{bmatrix}a & b \\ c & d\end{bmatrix}$, then $\begin{bmatrix}1 & 3 \\ 2 & 7\end{bmatrix}\begin{bmatrix}a & c \\ b & d\end{bmatrix}=\begin{bmatrix}1 & 0 \\ 0 & 1\end{bmatrix}$. We can get a system of equations:$

\begin{cases}

\begin{bmatrix}1 & 3 \\ 2 & 7\end{bmatrix}\begin{bmatrix}a \\ b\end{bmatrix}=\begin{bmatrix}1 \\ 0\end{bmatrix} \\

\begin{bmatrix}1 & 3 \\ 2 & 7\end{bmatrix}\begin{bmatrix}c \\ d\end{bmatrix}=\begin{bmatrix}0 \\ 1\end{bmatrix}

\end{cases}$

Gauss-Jordam(Solve 2 equations at once):

Stick the I to the right of A, making it an augmented matrix:$

[\color{red}A\color{black}|\color{blue}I\color{black}]=

\begin{bmatrix}\begin{array}{cc | cc}

1 & 3 & 1 & 0 \\

2 & 7 & 0 & 1

\end{array}\end{bmatrix}$.

Then transform it into $[I|E]$ by elimination.

$$

\begin{bmatrix}\begin{array}{cc | cc}

1 & 3 & 1 & 0 \\

2 & 7 & 0 & 1

\end{array}\end{bmatrix}

\xrightarrow[E_{21}]{row_2 \ - \ 2row_1}

\begin{bmatrix}\begin{array}{cc | cc}

1 & 3 & 1 & 0 \\

0 & 1 & -2 & 1

\end{array}\end{bmatrix}

\xrightarrow[E_{12}]{row_1 \ - \ 3row_2}

\begin{bmatrix}\begin{array}{cc | cc}

1 & 0 & 7 & -3 \\

0 & 1 & -2 & 1

\end{array}\end{bmatrix}

$$

See the left side. The matrix operation $E=E_{21}E_{12}$ makes $\color{red} A$ become $I$. So $E\color{red}A\color{black}=I$. And the right side $\color{blue}I$ becomes $E$ because $E\color{blue}I\color{black}=E$. Then $E=A^{-1}$.

6. Nonsquare Matrices, Dot Products, Cross Products and Solving System of Linear Equations

6.1 Non-square Matrices

Think about matrices that are not square. What’s the meaning of them? How to use them?

For example, there is a 3x2 metrix $\begin{bmatrix} 3 & 1 \\ 4 & 1 \\ 5 & 2 \end{bmatrix}$

$$

\begin{equation}

\begin{bmatrix} 3 & 1 \\ 4 & 1 \\ 5 & 2 \end{bmatrix}

\begin{bmatrix} 2 \\ 3 \end{bmatrix}

=

\begin{bmatrix} 9 \\ 11 \\ 6 \end{bmatrix}

\end{equation}

$$

If we multiply it by a 2-dimensional vector, it produces a 3-dimensional vector. The matrix tells where $\hat i$ lands and where $\hat j$ lands.

$$

\begin{align}

\color{green} \text{where } &\color{green}\hat{i} \text{ lands} \\

&\color{black}\begin{bmatrix}

\color{green} 3 & \color{red} 1 \\

\color{green} 4 & \color{red} 1 \\

\color{green} 5 & \color{red} 2

\end{bmatrix} \\

&\color{red}\text{where } \color{red}\hat{j} \text{ lands}

\end{align}

$$

Likewise, if there is a 2x3 matrix with two rows and three columns, the three columns indicate that it starts with a space with three basis vectors, and the two rows indicate that the landing spot for each of those basis vectors is described with only two coordinates, so they must be landing in two dimensions.

$$

\begin{equation}

\begin{bmatrix} 3 & 1 & 4 \\ 1 & 5 & 2 \end{bmatrix}

\begin{bmatrix} 3 \\ 1 \\ 2 \end{bmatrix}

=

\begin{bmatrix} 18 \\ 12 \end{bmatrix}

\end{equation}

$$

So it turns a 3-D vector into a 2-D vector.

6.2 Dot Products

6.2.1 Geometrical Intuition of Dot Product

If there is a 2x1 transformation matrix, it turns 2-D vector2 into one dimension. One-dimensional space is just the number line, so the matrix turns vectors into numbers.

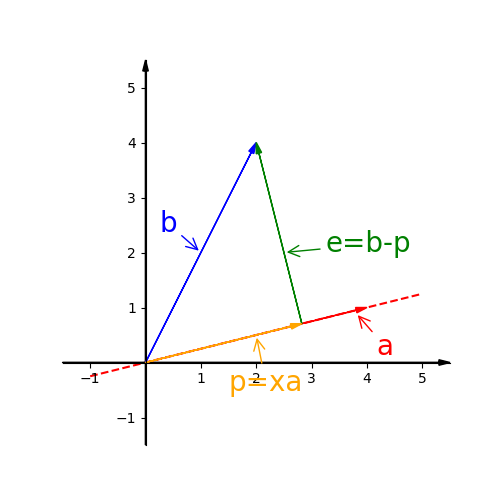

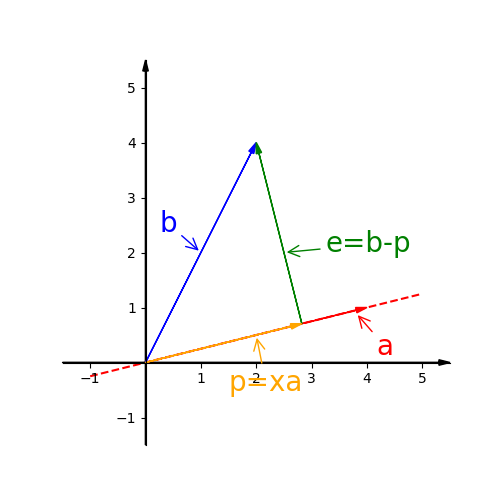

Dot product of two vectors $\vec v$, $\vec u$ is described as the multiplication between the length of $\vec u$ and the length of the vector $\vec w$. $\vec w$ is the vector $\vec u$ projecting onto $\vec v$.

If the projection of $\vec u$ is pointing in the opposite direction from $\vec v$, the dot product will be negative.

When two vectors are perpendicular, meaning the projection of one onto the other is the zero vector, their dot product is 0.

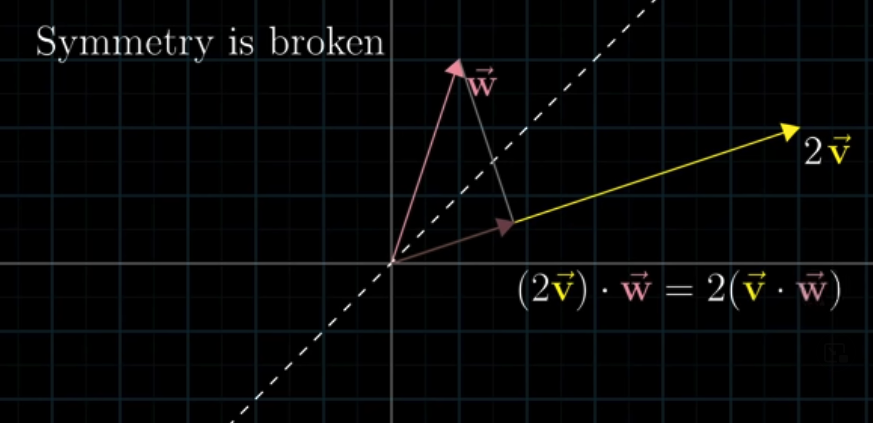

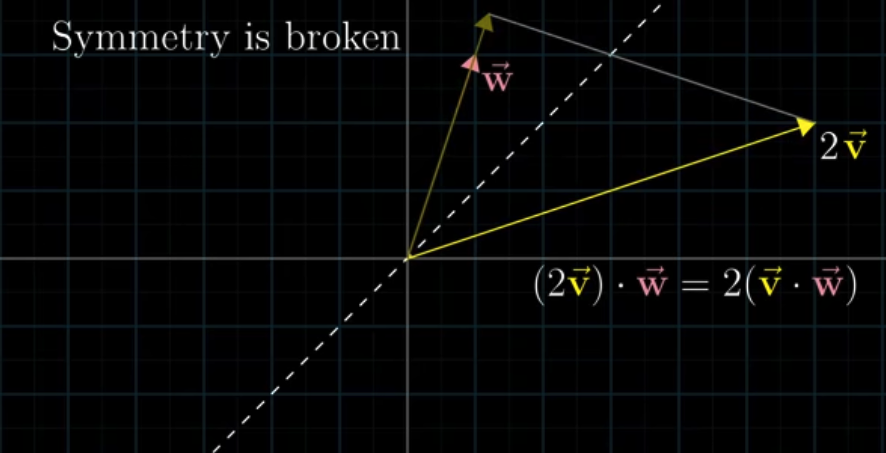

Obviously the order of $\vec u$ and $\vec v$ doesn’t affect the result: $\vec u \cdot \vec v = \vec v \cdot \vec u$

Geometrically, it can be described as symmetric, i.e. (length of projection $\vec u$)(length of $\vec v$)=(length of $\vec u$)(length of projection $\vec v$).

If there are two vectors which have the same length, they are obviously symmetric. And it’s a certainty that $\vec u \cdot \vec v = \vec v \cdot \vec u$ for symmetry.

If they are asymmetric, for example, scaling $\vec v$ by 2, the projection of $w$ won’t be changed. So the result is twice the dot product of $\vec u$ and $\vec v$.

Thinking about $\vec v$ getting projected onto $\vec w$. In this case, the length of projection gets scaled by 2. The overall effect is still to just double the dot product.

6.2.2 Numerical Computation and its Geometrical Meaning

Numerically compute:

$$

\vec u \cdot \vec v = \sum_{i=1}^n \vec u_i \vec v_i

$$

What’s the connection between this numerical process and geometrical conception?

If there is a 1x2 matrix that transforms the vector into a number:

$$

\begin{equation}

\begin{bmatrix} 1 & -2 \end{bmatrix}

\begin{bmatrix} 4 \\ 3 \end{bmatrix}

=

4 \cdot 1 + 3 \cdot (-2)

=

-2

\end{equation}

$$

This numerical operation of multiplying a 1x2 matrix by a vector feels like taking the dot product of two vectors.

The matrix tells where $\hat i$ and $\hat j$ lands onto the number line, and the vector describes the coefficient of $\hat i$ and $\hat j$. And the final 1-dimensional vector on the number line, which is a number, is the result of dot product.

6.3 Cross Products

The cross product of two vector $\vec v$ and $\vec w$ in 3-D space is defined as

$$

\begin{equation}

\begin{bmatrix} v_1 \\ v_2 \\ v_3 \end{bmatrix}

\times

\begin{bmatrix} w_1 \\ w_2 \\ w_3 \end{bmatrix}

=

\begin{bmatrix}

v_2 \cdot w_3 - w_2 \cdot v_3 \\

v_3 \cdot w_1 - w_3 \cdot v_1 \\

v_1 \cdot w_2 - w_1 \cdot v_2

\end{bmatrix}

\end{equation}

$$

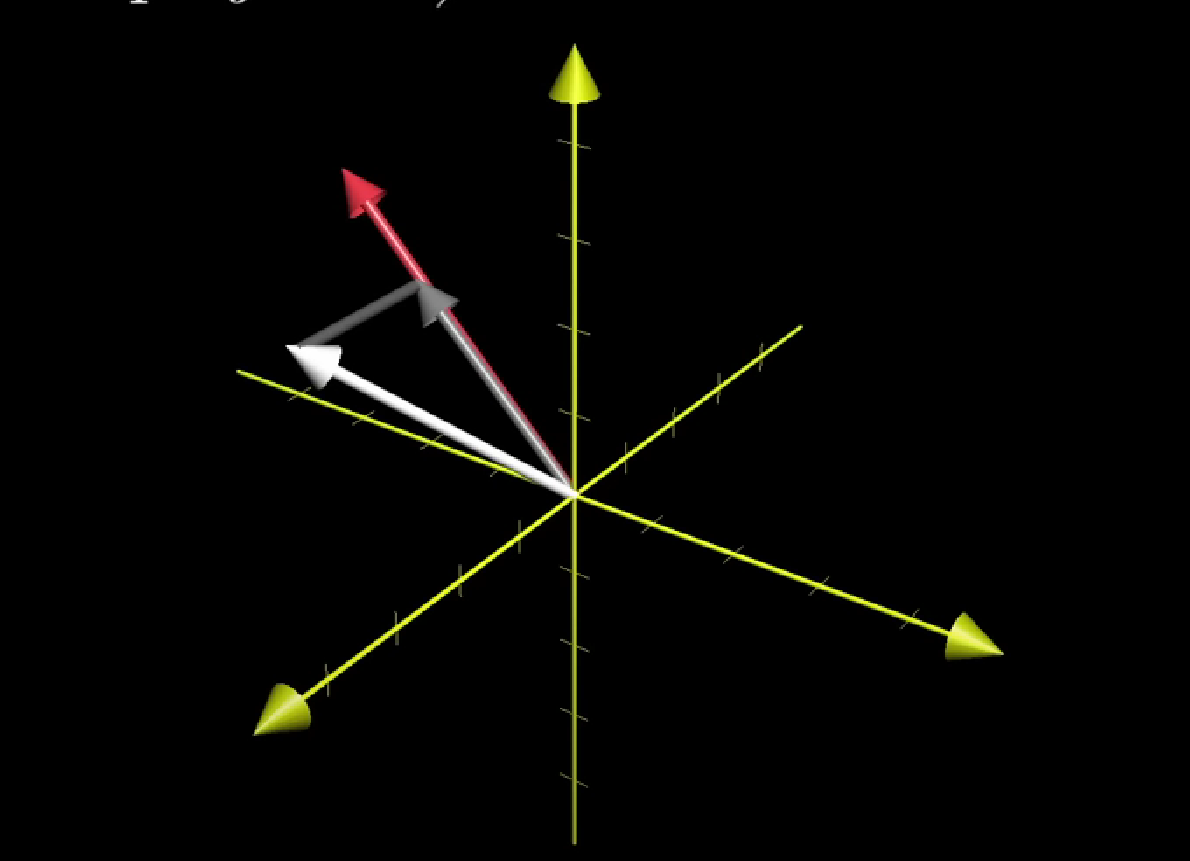

The outcome vector is perpendicular to the parallelogram spanned up by $\vec v$ and $\vec w$, and the direction follows the right-hand rule.

The length of the outcome vector is the area of the parallelogram spanned up by $\vec v$ and $\vec w$.

- $\vec v \cdot (\vec \times \vec w) = 0$

- $\vec w \cdot (\vec \times \vec w) = 0$

- $||(\vec v \times \vec w) = ||\vec v|| \times ||\vec w|| \times \sin (\theta)$

There is a notation trick:

$$

\begin{equation}

\color{red} \begin{bmatrix} v_1 \\ v_2 \\ v_3 \end{bmatrix}

\color{black} \times

\color{blue} \begin{bmatrix} w_1 \\ w_2 \\ w_3 \end{bmatrix}

\color{black}

=

\begin{vmatrix}

\hat i & \color{red} v_1 & \color{blue} w_1 \\

\hat j & \color{red} v_2 & \color{blue} w_2 \\

\hat k & \color{red} v_3 & \color{blue} w_3

\end{vmatrix}

=

\hat{i}(\color{red}v_2 \color{black}\cdot \color{blue}w_3 \color{black}- \color{blue}v_3 \color{black}\cdot \color{red}w_2) \color{black} +

\hat{j}(\color{red}v_3 \color{black}\cdot \color{blue}w_1 \color{black}- \color{blue}v_1 \color{black}\cdot \color{red}w_3) \color{black} +

\hat{k}(\color{red}v_1 \color{black}\cdot \color{blue}w_2 \color{black}- \color{blue}v_2 \color{black}\cdot \color{red}w_1)

\end{equation}

$$

6.4 Deep Dive into Cross Products

So what’s the meaning of the cross product? Why defining a vector that’s perpendicular to the plane spanned up by two vectors?

If the third vector $\vec u = \begin{bmatrix} x \\ y \\ z \end{bmatrix}$ is variable, the volume of the parallelepiped will be variable.

$$

\begin{align}

the\ volume\ of\ the\ parallelepipied &=

\begin{vmatrix}

x & \color{red}v_1 & \color{blue}w_1 \\

y & \color{red}v_2 & \color{blue}w_2 \\

z & \color{red}v_3 & \color{blue}w_3

\end{vmatrix} \\

&=

x(\color{red}v_2 \color{black}\cdot \color{blue}w_3 \color{black}- \color{blue}v_3 \color{black}\cdot \color{red}w_2) \color{black} +

y(\color{red}v_3 \color{black}\cdot \color{blue}w_1 \color{black}- \color{blue}v_1 \color{black}\cdot \color{red}w_3) \color{black} +

z(\color{red}v_1 \color{black}\cdot \color{blue}w_2 \color{black}- \color{blue}v_2 \color{black}\cdot \color{red}w_1)

\end{align}

$$

We can see the result is similar to the cross product of $\vec v$ and $\vec w$. The $x$, $y$ and $z$ are unknowns. We can pack the coefficient of each unknown into $\vec p$.

$$

\begin{align}

p_1 &= \color{red}v_2 \color{black}\cdot \color{blue}w_3 \color{black}- \color{blue}v_3 \color{black}\cdot \color{red}w_2 \\

p_2 &= \color{red}v_3 \color{black}\cdot \color{blue}w_1 \color{black}- \color{blue}v_1 \color{black}\cdot \color{red}w_3 \\

p_3 &= \color{red}v_1 \color{black}\cdot \color{blue}w_2 \color{black}- \color{blue}v_2 \color{black}\cdot \color{red}w_1 \\

\end{align}

$$

Then

$$

\begin{vmatrix}

x & \color{red}v_1 & \color{blue}w_1 \\

y & \color{red}v_2 & \color{blue}w_2 \\

z & \color{red}v_3 & \color{blue}w_3

\end{vmatrix}

=

p_1 x + p_2 y + p_3 z

$$

The result looks like dot product, so we can pack $p_1$, $p_2$ and $p_3$ into a vector:

$$

\begin{bmatrix}

p_1 \\

p_2 \\

p_3

\end{bmatrix}

\cdot

\begin{bmatrix}

x \\

y \\

z

\end{bmatrix}

=

\begin{vmatrix}

x & \color{red}v_1 & \color{blue}w_1 \\

y & \color{red}v_2 & \color{blue}w_2 \\

z & \color{red}v_3 & \color{blue}w_3

\end{vmatrix}

$$

Then the question becomes, what 3-D vector $\vec p$ has the special property, that when you take a dot product between $\vec p$ and some vector $\begin{bmatrix} x \\ y \\ z \end{bmatrix}$, it gives the same result as plugging in the vector $\begin{bmatrix} x \\ y \\ z \end{bmatrix}$ to the first column of a matrix whose other two columns have the coordinates of $\vec v$ and $\vec w$, then computing the determinant.

Geometrically, the question is, what 3-D vector $\vec p$ has the special property, that when you take a dot product between $\vec p$ and some vector $\begin{bmatrix} x \\ y \\ z \end{bmatrix}$, it gives the same result as if you took the signed volume of a parallelepiped defined by this vector $\begin{bmatrix} x \\ y \\ z \end{bmatrix}$ along with $\vec v$ and $\vec w$.

We know determinant of a 3x3 matrix, i.e. the pack of three vectors, is the volume of parallelepiped. And a dot product of two 3-D vectors is related to the projection of one vector.

The volume of a parallelepiped, is the area of the parallelogram times the height of the parallelepiped

In other words, the way our linear function works on a given vector is to project that vector onto a line that’s perpendicular to both $\vec v$ and $\vec w$, then to multiply the length of that projection by the area of the parallelogram spanned by $\vec v$ and $\vec w$.

But it’s the same thing as taking a dot product between $\begin{bmatrix} x \\ y \\ z \end{bmatrix}$ and a vector that’s perpendicular to $\vec v$ and $\vec w$ with a length equal to the area of parallelogram.

$$

\begin{align}

& (\text{area of parallelogram})\times(\text{the height})=(\begin{bmatrix} x \\ y \\ z \end{bmatrix} \cdot \vec p) \\

& \vec p \text{ is perpendicular to } \vec v \text{ and } \vec w \text{, with a length equals to that parallelogram}

\end{align}

$$

This is the fundamental reason why the computation and the geometric interpretation of the cross product are related.

6.5 Mixed Products

Mixed product of three vectors

$$

(\vec a, \vec b, \vec c) = (\vec a \times \vec b) \cdot \vec c =

\begin{vmatrix}

a_1 & b_1 & c_1 \\

a_2 & b_2 & c_2 \\

a_3 & b_3 & c_3

\end{vmatrix}

$$

The number of mixed product represents the signed volume of the parallelepiped spanned by three vectors $\vec a$, $\vec b$ and $\vec c$.

If the mixed product is zero, meaning the volume of that parallelepiped is zero, three vectors $\vec a$, $\vec b$ and $\vec c$ lie on the same plane.

6.6 Solving System of Linear Equations

6.6.1 Solving $Ax=0$

The systems of equations like $Ax=0$ are called homogeneous system of linear equations.

For example,

$$

\begin{cases}

x_1 &+ 2x_2 &+ 3x_3 &= 0 \\

2x_1 &+ 4x_2 &+ 6x_3 &= 0 \\

2x_1 &+ 6x_2 &+ 8x_3 &= 0 \\

2x_1 &+ 8x_2 &+ 10x_3 &= 0

\end{cases}

$$

For these equations, obviously there is a special solution $\begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}$ because all the right-hand constants b are 0. This special solution is call trivial solution.

The matrix and the eliminated version of that matrix:

$$

\begin{equation}

A=

\begin{bmatrix}

1 & 2 & 2 \\

2 & 4 & 6 \\

2 & 6 & 8 \\

2 & 8 & 10

\end{bmatrix}

\xrightarrow{eliminate}

\begin{bmatrix}

\boxed{1} & 2 & 3 \\

0 & \boxed{2} & 2 \\

0 & 0 & 0 \\

0 & 0 & 0

\end{bmatrix}

\end{equation}

$$

There is 2 pivot variables(boxed number $\boxed{1}$ and $\boxed{2}$). The rank of the matrix A is 2.

The columns in which pivot variables locate are called pivot columns, and other columns are called free columns. The variables in free columns are called free variables. The amount of free variables is $n-r=3-2=1$.

The equations become

$$

\begin{cases}

x_1 &+ 2x_2 &+ 3x_3 &= 0 \\

&\quad 2x_2 &+ 2x_3 &= 0 \\

\end{cases}

$$

We can let the free variable $x_3=1$, then solve the equations and get $x=\begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix}$

If we scale the solution $x$ by a constant $c$, then $cx$ is also the solution of the equation.

So the nontrivial solution is $x=c\begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix}$

Take another example,

$$

\begin{cases}

x_1 &+ 2x_2 &+ 2x_3 &+ 2x_4 &= 0 \\

2x_1 &+ 4x_2 &+ 6x_3 &+ 8x_4 &= 0 \\

3x_1 &+ 6x_2 &+ 8x_3 &+ 10x_4 &= 0

\end{cases}

$$

The matrix and the eliminated version of that matrix:

$$

\begin{equation}

A=

\begin{bmatrix}

1 & 2 & 2 & 2 \\

2 & 4 & 6 & 8 \\

3 & 6 & 8 & 10

\end{bmatrix}

\xrightarrow{eliminate}

\begin{bmatrix}

\boxed{1} & 2 & 2 & 2 \\

0 & 0 & \boxed{2} & 4 \\

0 & 0 & 0 & 0

\end{bmatrix}

\end{equation}

$$

There is 2 pivot variables(boxed number $\boxed{1}$ and $\boxed{2}$). The rank of the matrix A is 2.

In this example, the pivot columns are column 1 and 3, and the free columns are column 2 and 4.

Let one of the free variable be 1 and others be 0.

When $x_2=1$ and $x_4=0$, solve the equations, and then we can get $x=\begin{bmatrix} -2 \\ 1 \\ 0 \\ 0 \end{bmatrix}$.

When $x_2=0$ and $x_4=1$, solve the equations, and then we can get $x=\begin{bmatrix} 2 \\ 0 \\ -2 \\ 1 \end{bmatrix}$.

In each case, each $x$ times a constant $c$ is still the solution of equations.

So the solution set of the equations is the set of $x=c_1\begin{bmatrix} -2 \\ 1 \\ 0 \\ 0 \end{bmatrix}$ and $x=c_2\begin{bmatrix} 2 \\ 0 \\ -2 \\ 1 \end{bmatrix}$.

What’s more, the linear combination of the solution set is also the solution of the equations.

You may confuse that the special solution $x=c_2\begin{bmatrix} 0 \\ 1 \\ -2 \\ 1 \end{bmatrix}$ is also the solution of equations, but each solution in solution set cannot be scaled to produce it. Actually, it’s the linear combination when $c_1=1$ and $c_2=1$.

The linear combination of the two solution contains all the special solutions, including nontrivial solutions and trivial solutions(when $c_1=c_2=0$).

So the solution of the equations is $x=c_1\begin{bmatrix} -2 \\ 1 \\ 0 \\ 0 \end{bmatrix}+c_2\begin{bmatrix} 2 \\ 0 \\ -2 \\ 1 \end{bmatrix}$.

Generally, for system of equations with n unknowns(or mxn matrix), let $rank(A)=r$. Certainly $r \leqslant n$.

If $r=n$, then there is no free variable in equations. So in this case there is only a trivial solution $x=\begin{bmatrix} 0 \\ 0 \\ \vdots \\ 0 \end{bmatrix}$.

If $r<n$, then there are $(n-r)$ nontrivial equation(s)

$$

\begin{cases}

\quad\eta_1 &= c_1\begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1r} & 1 & 0 & 0 & \cdots & 0 \end{bmatrix}^T \\

\quad\eta_2 &= c_2\begin{bmatrix} a_{21} & a_{22} & \cdots & a_{2r} & 0 & 1 & 0 & \cdots & 0 \end{bmatrix}^T \\

\quad\eta_3 &= c_3\begin{bmatrix} a_{31} & a_{32} & \cdots & a_{3r} & 0 & 0 & 1 & \cdots & 0 \end{bmatrix}^T \\

\quad\vdots \\

\eta_{n-r} &= c_{n-r,1}\begin{bmatrix} a_{n-r,1} & a_{n-r,2} & \cdots & a_{n-r,r} & 0 & 0 & 0 & \cdots & 1 \end{bmatrix}^T

\end{cases}

$$

Solutions $\eta_1,\ \eta_2,\ \cdots,\ \eta_n$ are linear independent. And every special solution of the equations can be described as the linear combination of them.

The solution of $Ax=0$ is also called the solution in the nullspace.

6.6.2 Non-homogeneous System of Linear Equations

For example,

$$

\begin{cases}

x_1 &+ 2x_2 &+ 2x_3 &+ 2x_4 &= b_1 \\

2x_1 &+ 4x_2 &+ 6x_3 &+ 8x_4 &= b_2 \\

3x_1 &+ 6x_2 &+ 8x_3 &+ 10x_4 &= b_3

\end{cases}

$$

The augmented matrix and elimination:

$$

\begin{equation}

\begin{bmatrix}

\begin{array}{cccc:c}

1 & 2 & 2 & 2 & b_1 \\

2 & 4 & 6 & 8 & b_2 \\

3 & 6 & 8 & 10 & b_3

\end{array}

\end{bmatrix}

\xrightarrow{eliminate}

\begin{bmatrix}

\begin{array}{cccc:c}

1 & 2 & 2 & 2 & b_1 \\

0 & 0 & 2 & 4 & b_2 - 2b_1 \\

0 & 0 & 0 & 0 & b_3 - b_2 - b_1

\end{array}

\end{bmatrix}

\end{equation}

$$

Obviously $Ax=b$ is solvable when $b_3-b_2-b_1=0$.

Generally, $Ax=b$ is solvable when b is in $C(A)$.

In other words, if a combination of the rows of A gives zero rows, then the same combination of entries of b must give 0.

In order to get the complete solution of the equations, we can get the particular solution $Ax_p=b$ and the special solution in the nullspace $Ax_n=0$, then add them together: $A(x_p+x_n)=b$.

Because $Ax_n=0$ and $Ax_p=b$, we can get $A(x_p+x_n)=Ax_p + Ax_n = b + 0 = b$.

Step 1, to get the special solution $x_n$, we let all free variables be zero, then solve the equations.

In the example above, the free variables are $x_2$ and $x_4$, we let $x_2 = x_4 = 0$. Solve the equations, we can get $x_p=\begin{bmatrix} -2 \\ 0 \\ \cfrac{3}{2} \\ 0 \end{bmatrix}$.

Step 2, get the solution in the nullspace.

Let $x_2=1$ and $x_4=0$, then solve $Ax=0$, we get $x=\begin{bmatrix} -2 \\ 1 \\ 0 \\ 0 \end{bmatrix}$.

Let $x_2=0$ and $x_4=1$, then solve $Ax=0$, we get $x=\begin{bmatrix} 2 \\ 0 \\ -2 \\ 1 \end{bmatrix}$.

Step 3, add the particular solution and special solutions together.

$$

x =

\begin{bmatrix} -2 \\ 0 \\ \cfrac{3}{2} \\ 0 \end{bmatrix} +

c_1\begin{bmatrix} -2 \\ 1 \\ 0 \\ 0 \end{bmatrix} +

c_2\begin{bmatrix} 2 \\ 0 \\ -2 \\ 1 \end{bmatrix}

$$

Since in the nullspace, no matter how the linear combination is, $Ax$ always be zero. And we get all solutions in nullspace, meaning we can reach every solution by $x_p+x_n$, so this solution of equations is the complete solution.

Generally, for system of equations with n unknowns(or mxn matrix), let $rank(A)=r$. Certainly $r \leqslant n$.

If $r=n$, then there is no free variable in equations, meaning the nullspace is only the zero vector. So there is only one special solution or no solution.

If $r<n$, we just let every free variables equal zero to get one of the particular solution. Then get the solution in nullspace, add them together to get the complete solution.

6.6.3 Solvability of $Ax=b$

If $A$ is a mxn matrix, the rank of $A$ is $r$, then we call $A$ has a full row rank if $r=m$.

When $A$ has a full row rank, $Ax=b$ is solvable for any $b$. Because when $n>m$, there are $(n-r)$ free variable(s). When $n=m$, the matrix has a full rank, so it’s solvable.

7. Cramer’s Rule

As a method to solve the linear systems of equations, Cramer’s rule is not the best way(Gaussian elimination for example will be faster). But the relationship between computation and geometry in Cramer’s rule is also worth thinking about.

Computation:

To solve the system of equations:

$$

\begin{cases}

a_{11} x + a_{12} y + a_{13} z = \color{blue}b_1 \\

a_{21} x + a_{22} y + a_{23} z = \color{blue}b_2 \\

a_{31} x + a_{32} y + a_{33} z = \color{blue}b_3

\end{cases}

$$

Matrix form:

$$

\begin{bmatrix}

a_{11} & a_{12} & a_{13} \\

a_{21} & a_{22} & a_{23} \\

a_{31} & a_{32} & a_{33}

\end{bmatrix}

\begin{bmatrix} x \\ y \\ z \end{bmatrix}

=

\begin{bmatrix} \color{blue}b_1 \\ \color{blue}b_2 \\ \color{blue}b_3 \end{bmatrix}

$$

For the matrix whose determinant is not zero:

$$

\begin{equation}

x = \cfrac{

\begin{vmatrix}

\color{blue}b_{1} & a_{12} & a_{13} \\

\color{blue}b_{2} & a_{22} & a_{23} \\

\color{blue}b_{3} & a_{32} & a_{33}

\end{vmatrix}

}{

\begin{vmatrix}

a_{11} & a_{12} & a_{13} \\

a_{21} & a_{22} & a_{23} \\

a_{31} & a_{32} & a_{33}

\end{vmatrix}

},

y = \cfrac{

\begin{vmatrix}

a_{11} & \color{blue}b_{1} & a_{13} \\

a_{21} & \color{blue}b_{2} & a_{23} \\

a_{31} & \color{blue}b_{3} & a_{33}

\end{vmatrix}

}{

\begin{vmatrix}

a_{11} & a_{12} & a_{13} \\

a_{21} & a_{22} & a_{23} \\

a_{31} & a_{32} & a_{33}

\end{vmatrix}

},

z = \cfrac{

\begin{vmatrix}

a_{11} & a_{12} & \color{blue}b_{1} \\

a_{21} & a_{22} & \color{blue}b_{2} \\

a_{31} & a_{32} & \color{blue}b_{3}

\end{vmatrix}

}{

\begin{vmatrix}

a_{11} & a_{12} & a_{13} \\

a_{21} & a_{22} & a_{23} \\

a_{31} & a_{32} & a_{33}

\end{vmatrix}

}

\end{equation}

$$

It also works for systems of larger number of unknowns and the same number of equations. But a smaller example is nicer for the sake of simplicity.

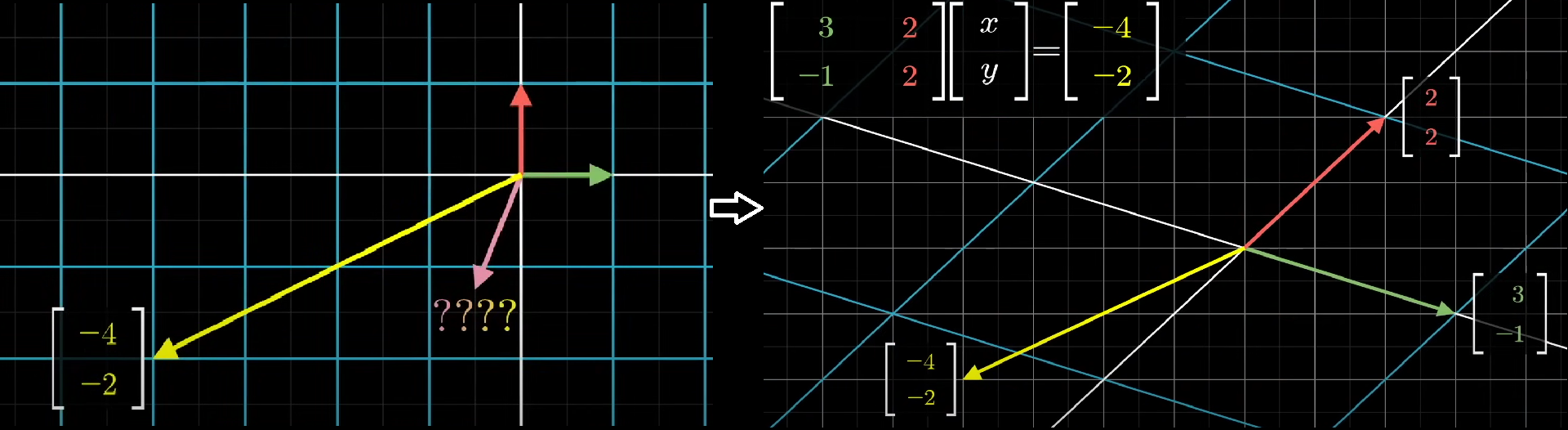

$$

\begin{bmatrix}

\color{green} 3 & \color{red}2 \\

\color{green}-1 & \color{red}2

\end{bmatrix}

\begin{bmatrix}

x \\

y

\end{bmatrix}

=

\begin{bmatrix}

\color{blue}-4 \\

\color{blue}-2

\end{bmatrix}

$$

The question is, which the input vector $\begin{bmatrix} x \\ y \end{bmatrix}$ is going to land on the output $\begin{bmatrix} \color{blue}-4 \\ \color{blue}-2 \end{bmatrix}$ after transformation.

One way to think about is to find a linear combination.

$$

x \begin{bmatrix} \color{green} 3 \\ \color{green}-1 \end{bmatrix} +

y \begin{bmatrix} \color{red} 2 \\ \color{red}2 \end{bmatrix} =

\begin{bmatrix} \color{blue} -4 \\ \color{blue}-2 \end{bmatrix}

$$

But it’s not easy to calculate when the number of unknowns and equations goes high.

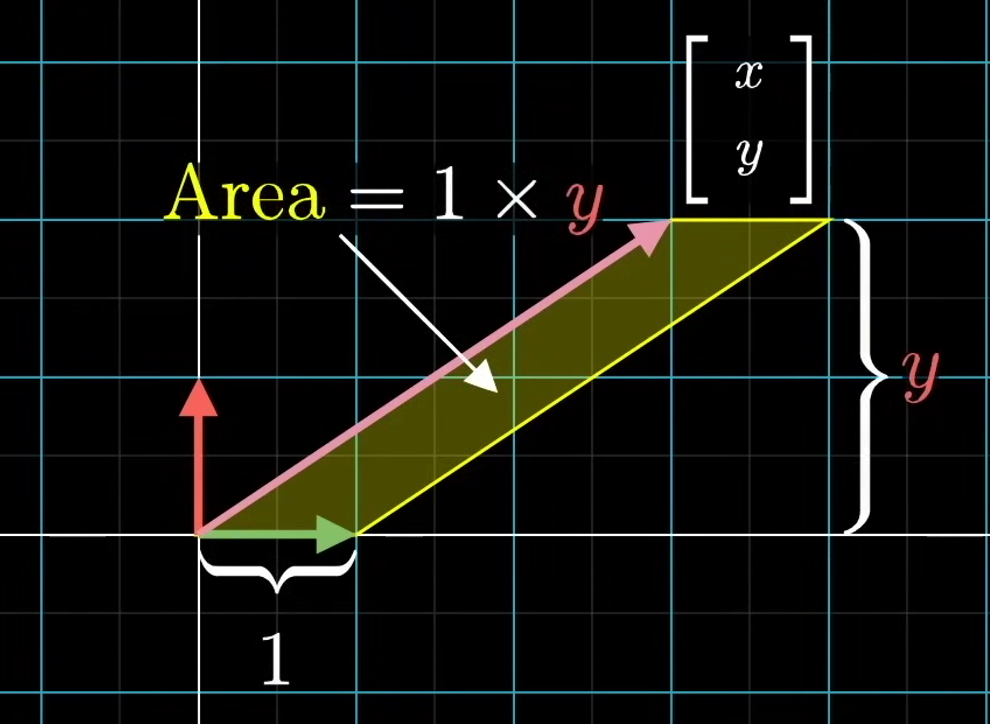

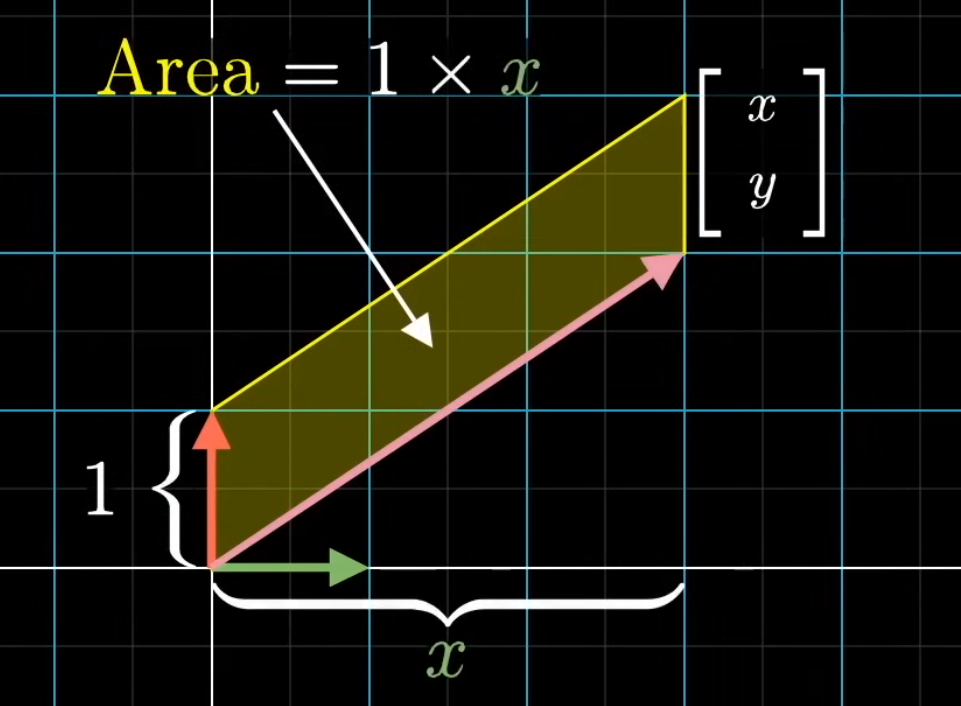

See another idea. Take the parallelogram defined by the first basis vector $\hat i$ and the unknown input vector $\begin{bmatrix} x \\ y \end{bmatrix}$. The are of the parallelogram is the base 1 times the height perpendicular to that base, which is the y-coordinate. So the area of the parallelogram is y. Notice that the area is signed. When y is negative, then the area will be negative as well.

And symmetrically, if you look at the parallelogram spanned up by the unknown vector and $\hat j$, the area will be x.

Then the question is:

$$

\begin{bmatrix} \color{green}1 & \color{red}0 \\ \color{green}0 & \color{red}1 \end{bmatrix}

\begin{bmatrix} x \\ y \end{bmatrix}

=

\begin{bmatrix} a \\ b \end{bmatrix}

$$

Then if applying with a transformation, the area of the parallelogram will be scaled up or down. Actually, all the area in the space will be scaled up or down by the same number.

So, if the original area is y, then the transformed area will be y times the determinant of the transformation matrix A: $Signed\ Area=|A|y$

We can solve for y:

$$

\begin{equation}

y = \cfrac{\text{Signed Area}}{|A|}

\end{equation}

$$

Then the question becomes

$$

\begin{bmatrix} \color{green}2 & \color{red}-1 \\ \color{green}0 & \color{red}1 \end{bmatrix}

\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}

\begin{bmatrix} x \\ y \end{bmatrix}

=

\begin{bmatrix} \color{green}2 & \color{red}-1 \\ \color{green}0 & \color{red}1 \end{bmatrix}

\begin{bmatrix} x \\ y \end{bmatrix}

=

\begin{bmatrix} a_{transformed} \\ b_{transformed} \end{bmatrix}

$$

The $\hat i_{transformed}=\begin{bmatrix} \color{green}2 \\ \color{green}0 \end{bmatrix}$, $\hat j_{transformed}=\begin{bmatrix} \color{red}-1 \\ \color{red}1 \end{bmatrix}$

The area is the determinant of the matrix consists of two column vector $\hat i_{transformed}$ and the unknown vector. So the matrix is $\begin{bmatrix} \color{green}2 & a_{transformed} \\ \color{green}0 & b_{transformed} \end{bmatrix}$

$$

\begin{equation}

y = \cfrac{\text{Sign Area}}{|A|} =

\cfrac{\begin{vmatrix} \color{green}2 & a_{transformed} \\ \color{green}0 & b_{transformed} \end{vmatrix}}{\begin{vmatrix} \color{green}2 & \color{red}-1 \\ \color{green}0 & \color{red}1 \end{vmatrix}}

\end{equation}

$$

Likewise,

$$

\begin{equation}

x =

\cfrac{\begin{vmatrix} a_{transformed} & \color{red}-1 \\ b_{transformed} & \color{red}1 \end{vmatrix}}{\begin{vmatrix} \color{green}2 & \color{red}-1 \\ \color{green}0 & \color{red}1 \end{vmatrix}}

\end{equation}

$$

Generally, for

$$

\begin{bmatrix} \color{green}a_11 & \color{red}a_12 \\ \color{green}a_21 & \color{red}a_22 \end{bmatrix}

\begin{bmatrix} x \\ y \end{bmatrix}

=

\begin{bmatrix} b_1 \\ b_2 \end{bmatrix}

$$

The solutions are

$$

\begin{equation}

\begin{cases}

x = \cfrac{\begin{vmatrix} b_1 & \color{red}a_{12} \\ b_2 & \color{red}a_{22} \end{vmatrix}}{\begin{vmatrix} \color{green}a_{11} & \color{red}a_{12} \\ \color{green}a_{21} & \color{red}a_{22} \end{vmatrix}}

\\

y = \cfrac{\begin{vmatrix} \color{green}a_{11} & b_1 \\ \color{green}a_{21} & b_2 \end{vmatrix}}{\begin{vmatrix} \color{green}a_{11} & \color{red}a_{12} \\ \color{green}a_{21} & \color{red}a_{22} \end{vmatrix}}

\end{cases}

\end{equation}

$$

For 3-dimension, similarly, the volume of parallelepiped will be the base times the height. The volume can also be the determinant of the matrix consists of two basis vector $\hat i$, $\hat j$, and the third unknown vector. The area of the base is 1, so the volume of the parallelepiped is z-coordinate.

Then apply with a linear transformation:

The question becomes

$$

\begin{bmatrix}

\color{green}a_{11} & \color{red}a_{12} & \color{blue}a_{13} \\

\color{green}a_{21} & \color{red}a_{22} & \color{blue}a_{23} \\

\color{green}a_{31} & \color{red}a_{32} & \color{blue}a_{33}

\end{bmatrix}

\begin{bmatrix} x \\ y \\ z \end{bmatrix}

=

\begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}

$$

Then we can solve for z:

$$

\begin{equation}

z = \cfrac{\text{Volume of Parallelepiped}}{|A|} =

\cfrac{

\begin{vmatrix}

\color{green}a_{11} & \color{red}a_{12} & b_1 \\

\color{green}a_{21} & \color{red}a_{22} & b_2 \\

\color{green}a_{31} & \color{red}a_{32} & b_3

\end{vmatrix}

}{

\begin{vmatrix}

\color{green}a_{11} & \color{red}a_{12} & \color{blue}a_{13} \\

\color{green}a_{21} & \color{red}a_{22} & \color{blue}a_{23} \\

\color{green}a_{31} & \color{red}a_{32} & \color{blue}a_{33}

\end{vmatrix}

}

\end{equation}

$$

Similarly,

$$

\begin{equation}

x =

\cfrac{

\begin{vmatrix}

b_1 & \color{red}a_{12} & \color{blue}a_{13} \\

b_2 & \color{red}a_{22} & \color{blue}a_{23} \\

b_3 & \color{red}a_{32} & \color{blue}a_{33}

\end{vmatrix}

}{

\begin{vmatrix}

\color{green}a_{11} & \color{red}a_{12} & \color{blue}a_{13} \\

\color{green}a_{21} & \color{red}a_{22} & \color{blue}a_{23} \\

\color{green}a_{31} & \color{red}a_{32} & \color{blue}a_{33}

\end{vmatrix}

}

,

y =

\cfrac{

\begin{vmatrix}

\color{green}a_{11} & b_1 & \color{blue}a_{13} \\

\color{green}a_{21} & b_2 & \color{blue}a_{23} \\

\color{green}a_{31} & b_3 & \color{blue}a_{33}

\end{vmatrix}

}{

\begin{vmatrix}

\color{green}a_{11} & \color{red}a_{12} & \color{blue}a_{13} \\

\color{green}a_{21} & \color{red}a_{22} & \color{blue}a_{23} \\

\color{green}a_{31} & \color{red}a_{32} & \color{blue}a_{33}

\end{vmatrix}

}

\end{equation}

$$

8. Change of Basis

If there is a vector sitting in 2-D space, we have a standard way to describe it with coordinate: $\begin{bmatrix} x \\ y \end{bmatrix}$. It means the vector is $x\hat i + y\hat j$.

What if we used different basis vectors?

For example, if there is a world using basis vector as $\vec b_1 = \begin{bmatrix} 2 \\ 1 \end{bmatrix}$ and $\vec b_2 = \begin{bmatrix} -1 \\ 1 \end{bmatrix}$, and there is a vector using their world language: $\begin{bmatrix} -1 \\ 2 \end{bmatrix}$

We can translate the vector into our language as $\vec v=(-1)\vec b_1+2\vec b_2=-1\begin{bmatrix} 2 \\ 1 \end{bmatrix}+2 \begin{bmatrix} -1 \\ 1 \end{bmatrix} = \begin{bmatrix} -4 \\ 1 \end{bmatrix}$

Is it a deja vu? We can use a matrix to describe it:

$$

\begin{equation}

\underbrace{\begin{bmatrix} -4 \\ 1 \end{bmatrix}}_{\underset{\scriptsize\textstyle\text{language}}{\text{vector in our}}}

=

\overbrace{\begin{bmatrix} 2 & -1 \\ 1 & 1 \end{bmatrix}}^{\text{translation}}

\underbrace{\begin{bmatrix} -1 \\ 2 \end{bmatrix}}_{\underset{\scriptsize\textstyle\text{language}}{\underset{\scriptsize\textstyle\text{their}}{\text{vector in}}}}

\end{equation}

$$

If we want to translate our language to their language, you just multiply an inverse A:

\begin{equation}

\underbrace{\begin{bmatrix} -1 \\ 2 \end{bmatrix}}_{\underset{\scriptsize\textstyle\text{language}}{\underset{\scriptsize\textstyle\text{their}}{\text{vector in}}}}

=

\overbrace{\begin{bmatrix} \frac{1}{3} & \frac{1}{3} \\ -\frac{1}{3} & \frac{2}{3} \end{bmatrix}}^{A^{-1}}

\underbrace{\begin{bmatrix} -4 \\ 1 \end{bmatrix}}_{\underset{\scriptsize\textstyle\text{language}}{\text{vector in our}}}

\end{equation}

However, vectors are not the only thing in coordinates.

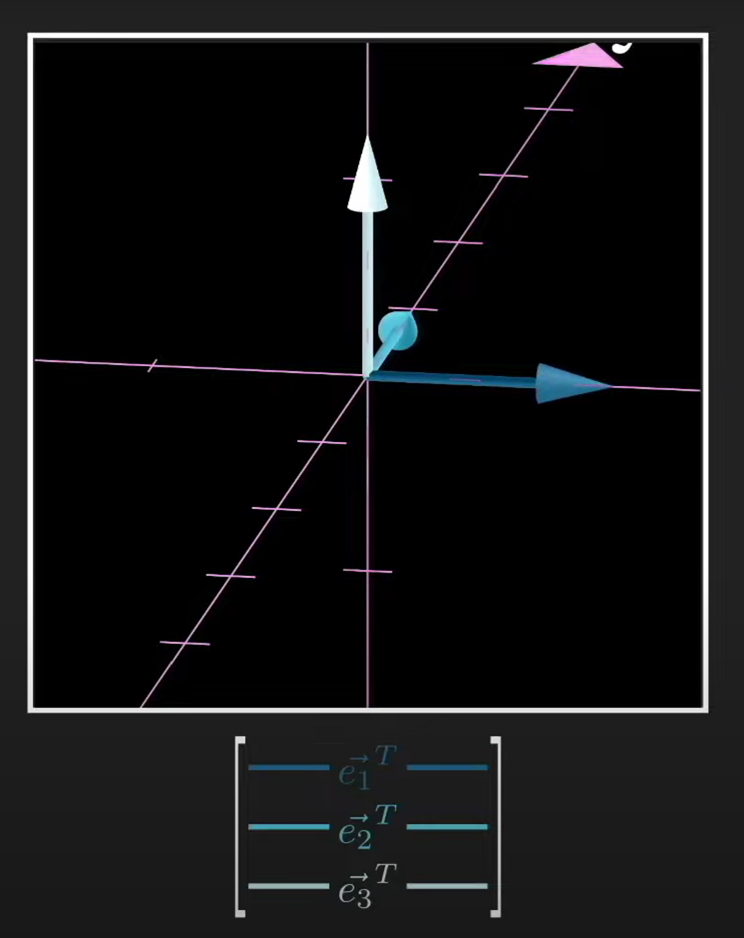

Consider some linear transformation, like a 90-degree counterclockwise rotation. The matrix in our language is $\begin{bmatrix}0 & -1 \\ 1 & 0 \end{bmatrix}$.

How to translate the same 90-degree counterclockwise rotation in their language? Multiplying the matrix with the translation matrix mentioned above is not right. Because the columns of the rotation matrix still represent our basis, not theirs. So multiplying the matrix with the translation matrix outputs a wrong matrix which is not really 90-degree counterclockwise rotation.

To translate the matrix, we can start with a random vector in their language: $\vec v$

In order to describe the rotation, we firstly translate it into our language:

$$

\overbrace{

\underbrace{\begin{bmatrix} 2 & -1 \\ 1 & 1 \end{bmatrix}}_{\text{translation}}

\vec v

}^{\text{vector in our language}}

$$

Then apply the transformation matrix by multiplying it on the left

$$

\overbrace{

\underbrace{\begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}}_{\text{Rotation}}

\begin{bmatrix} 2 & -1 \\ 1 & 1 \end{bmatrix}

\vec v

}^{\text{vector after transformation in our language}}

$$

Last step, apply the inverse matrix change of basis matrix, multiplied it on the left, to translate it back into their language.

$$

\overbrace{

\underbrace{\begin{bmatrix} 2 & -1 \\ 1 & 1 \end{bmatrix}^{-1}}_{\text{Translate back}}

\begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}

\begin{bmatrix} 2 & -1 \\ 1 & 1 \end{bmatrix}

\vec v

}^{\text{vector after transformation in their language}}

$$